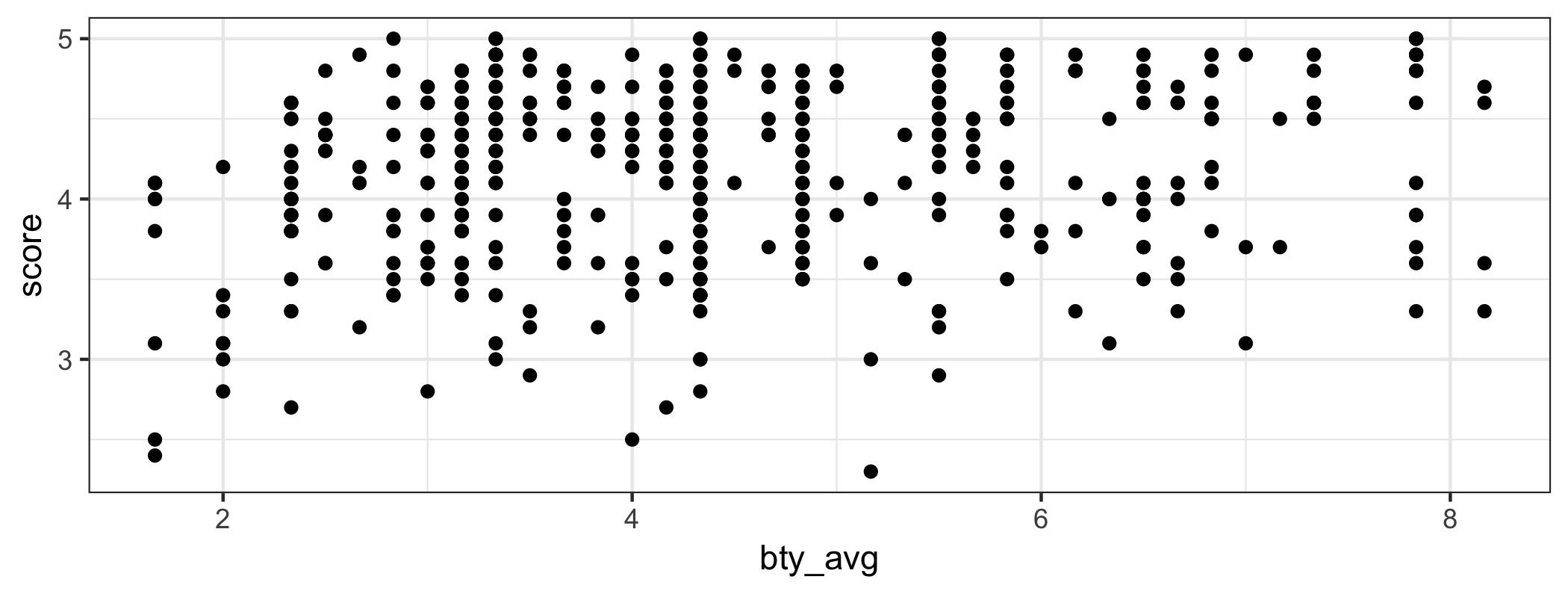

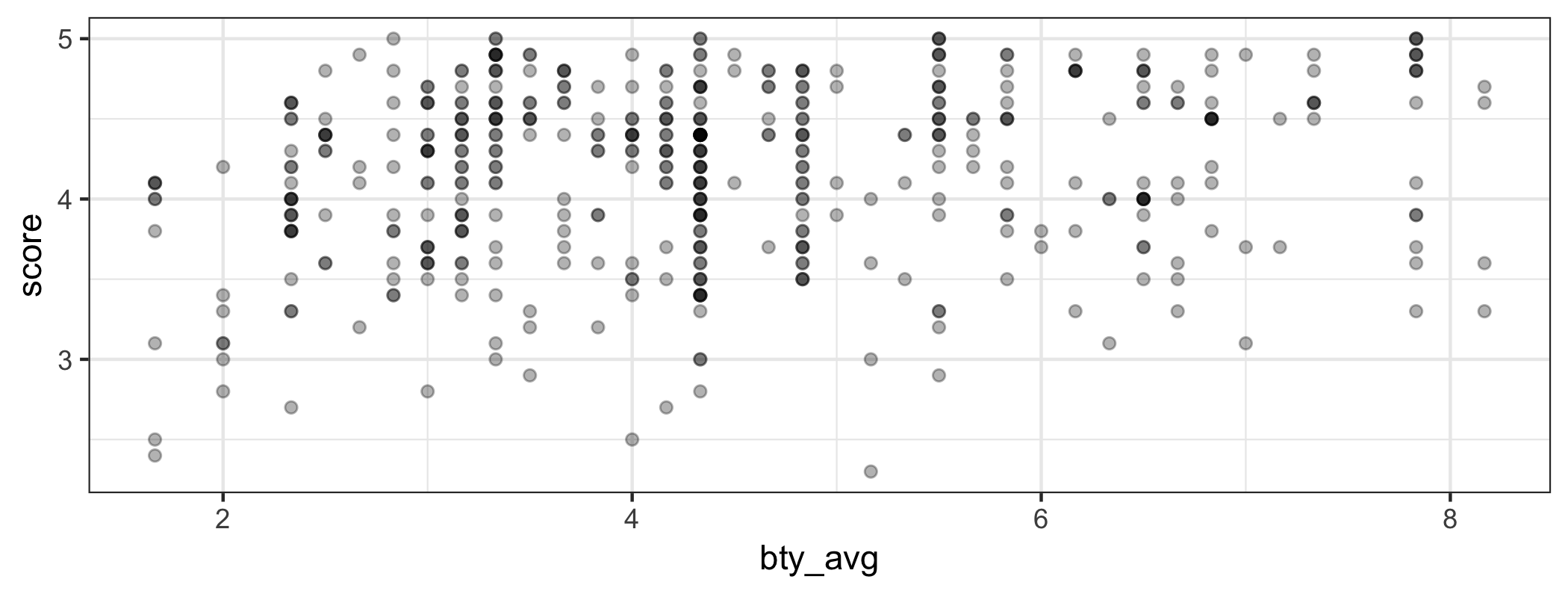

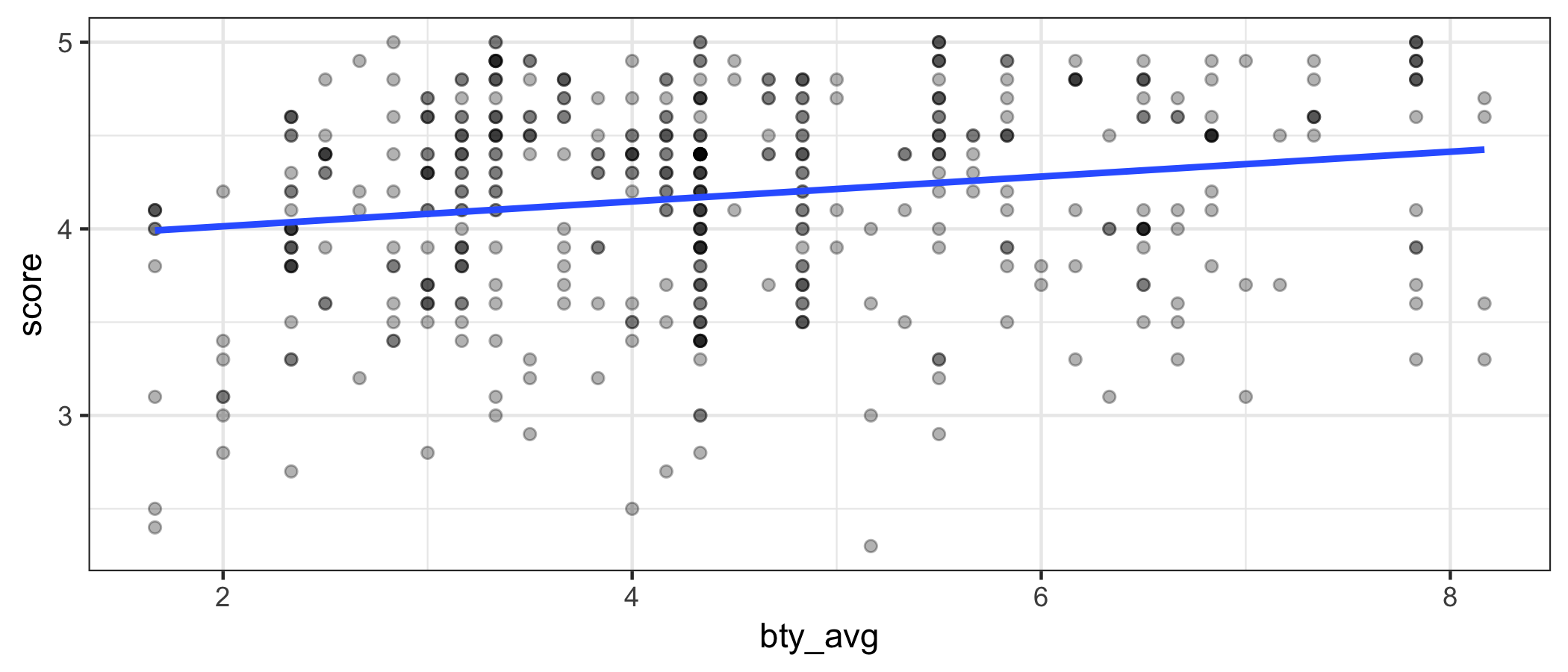

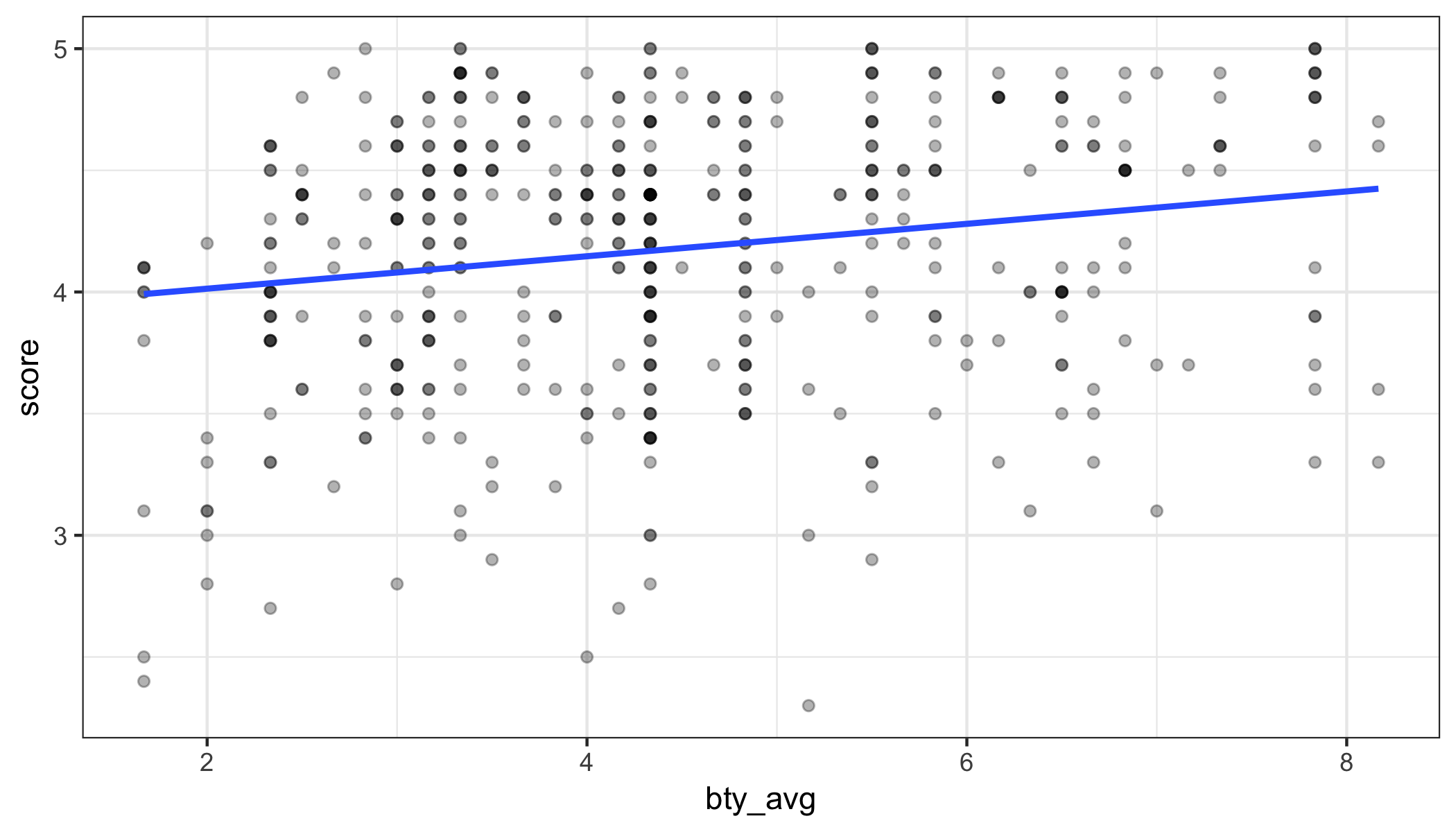

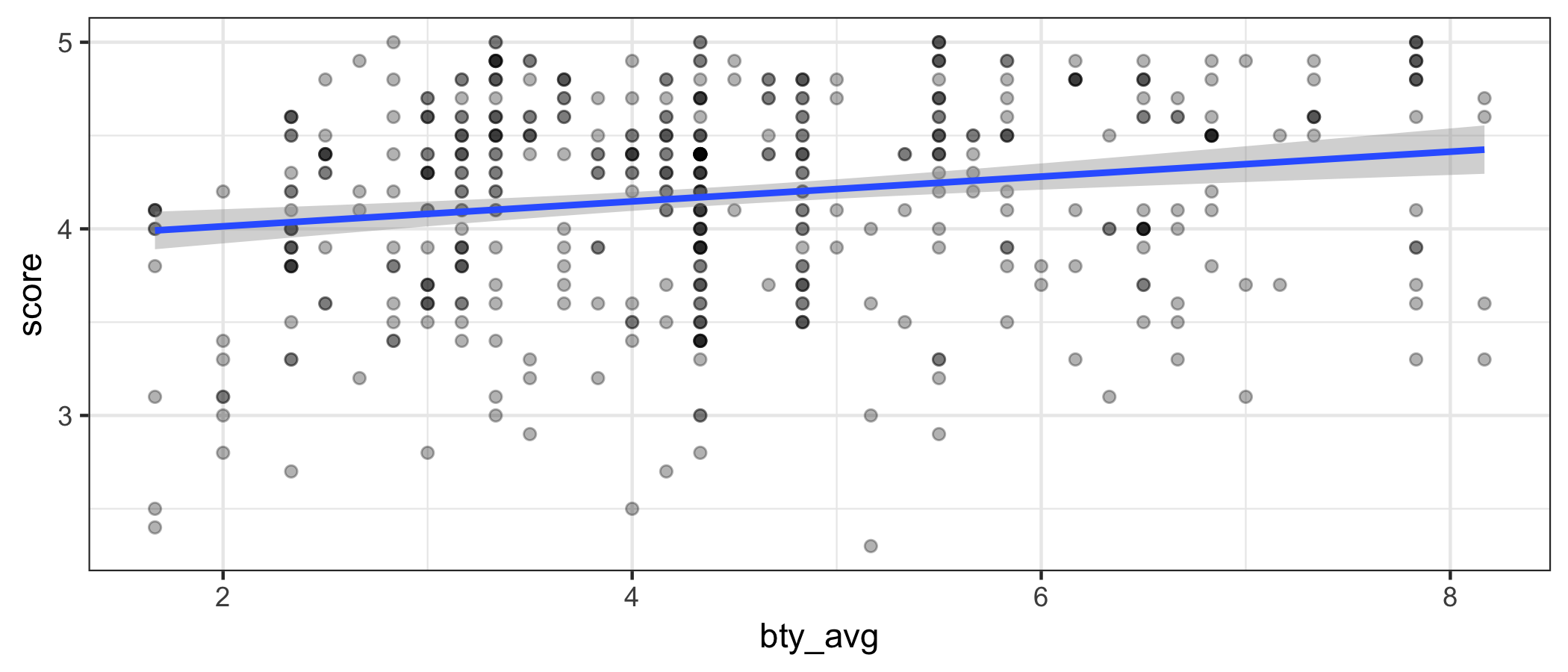

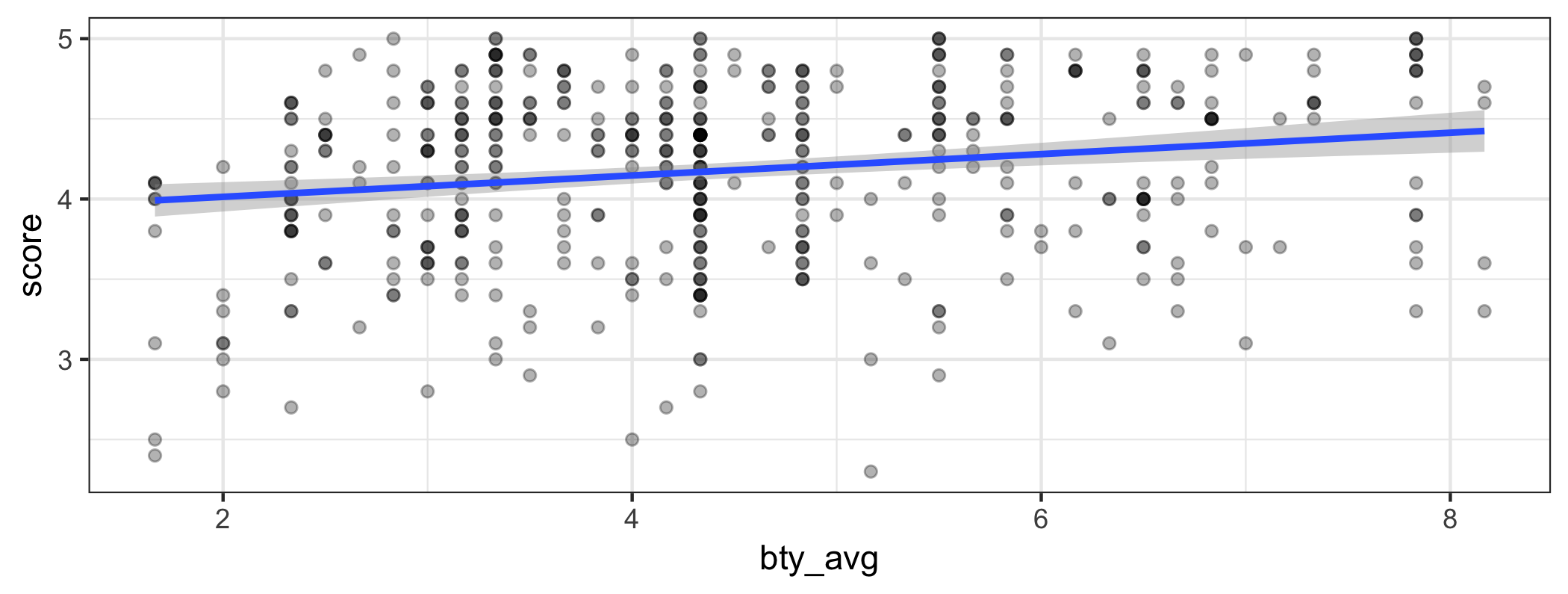

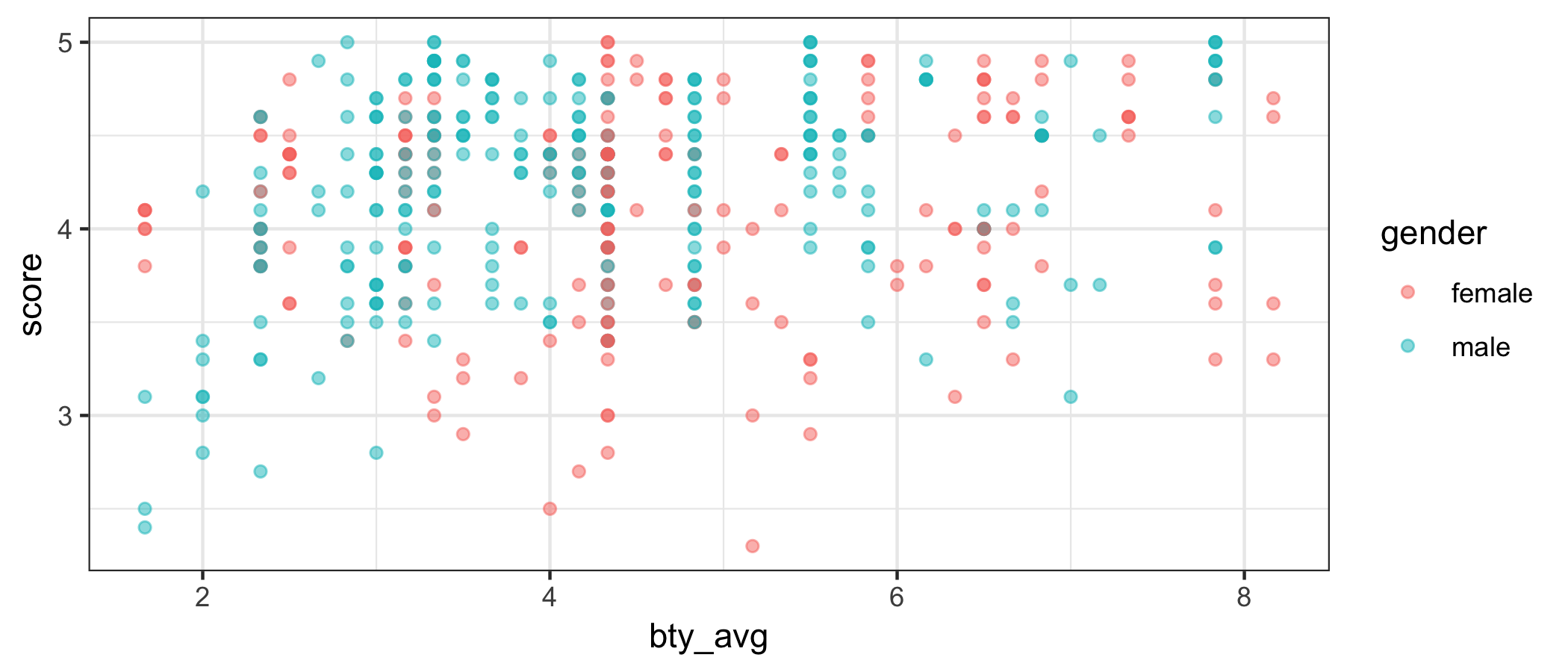

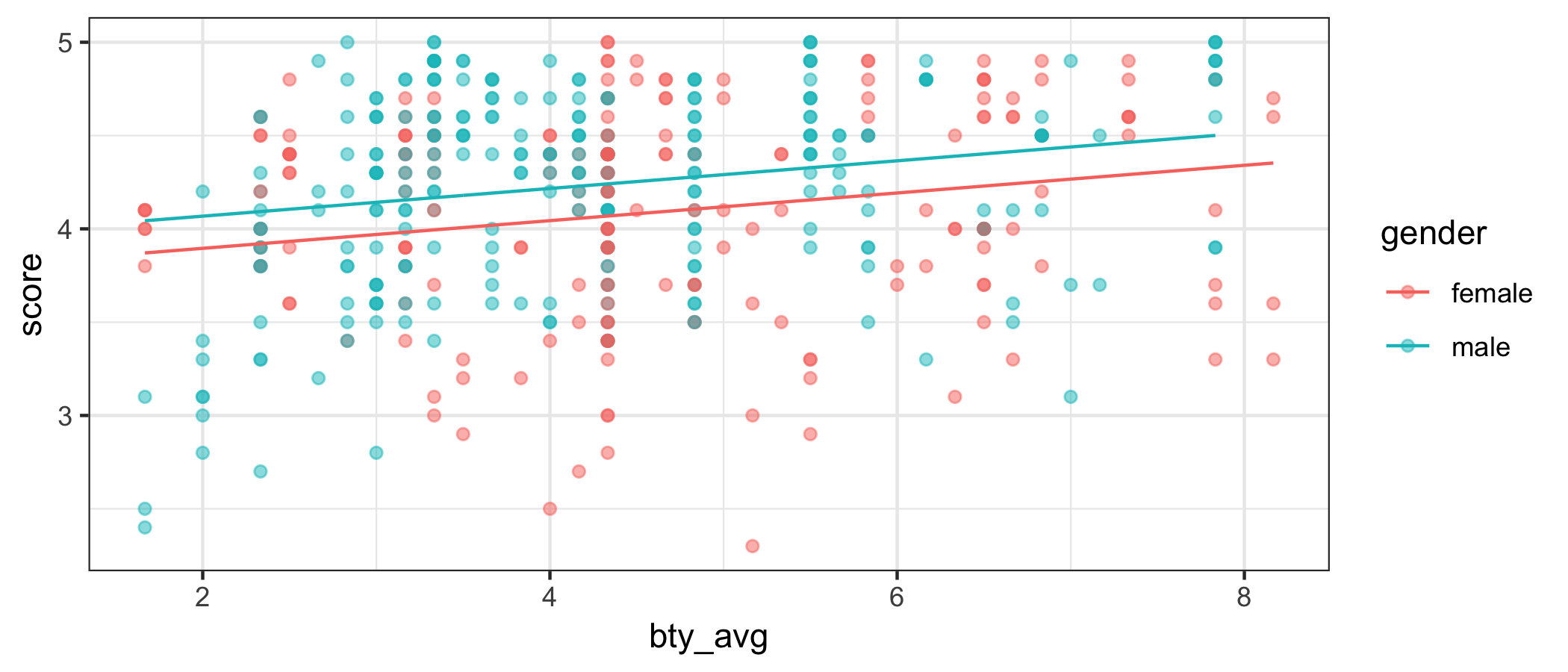

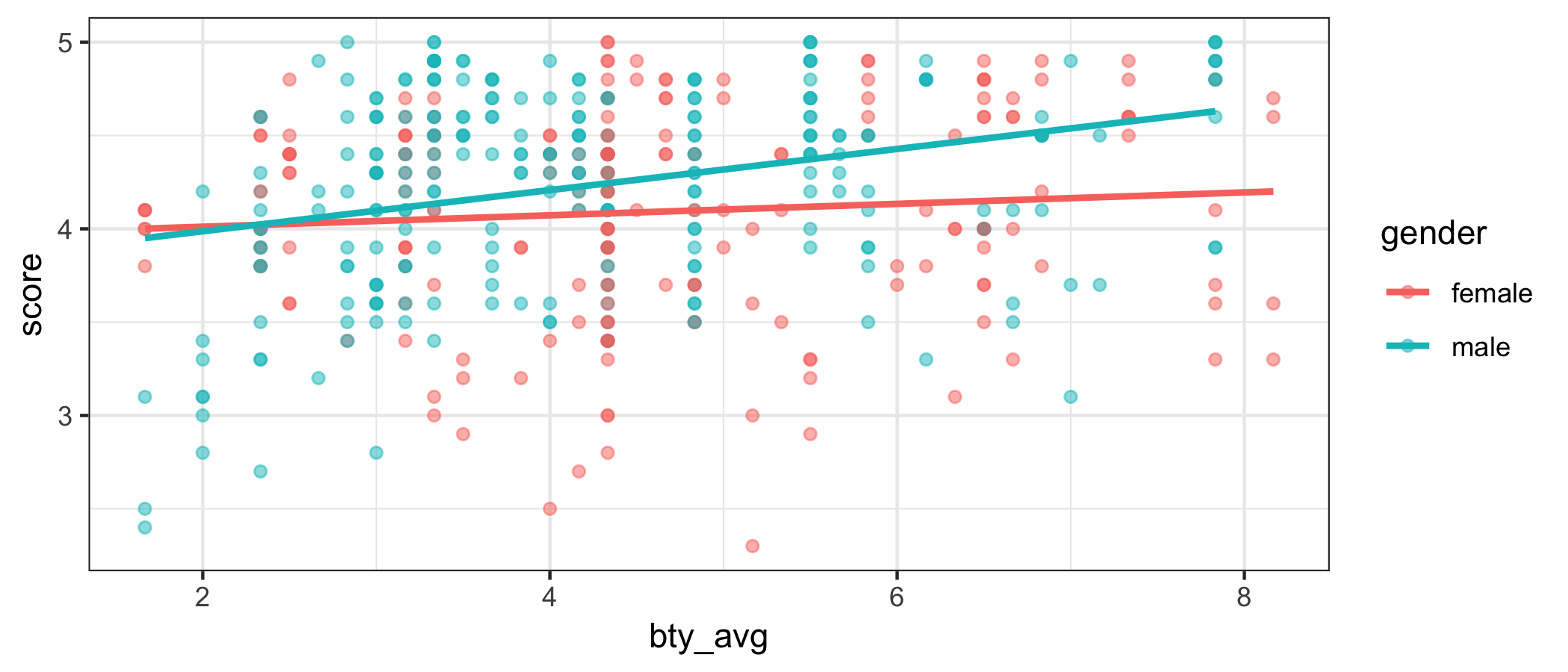

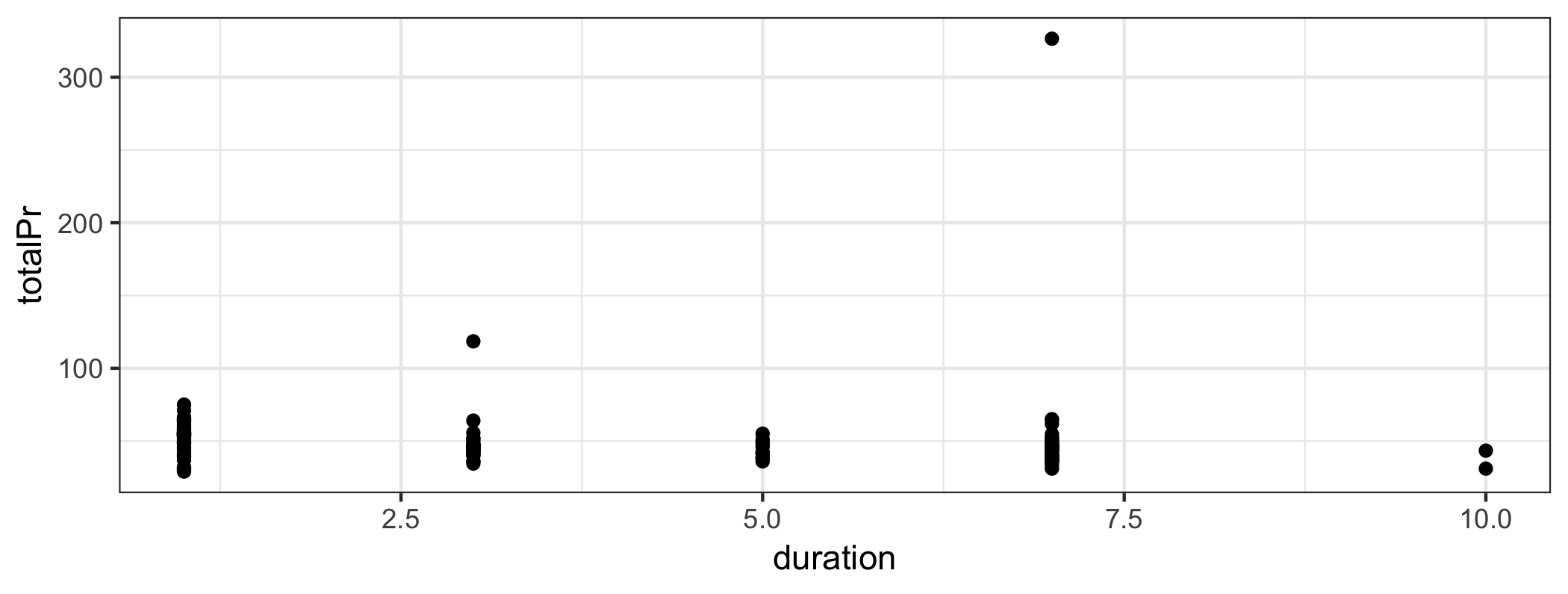

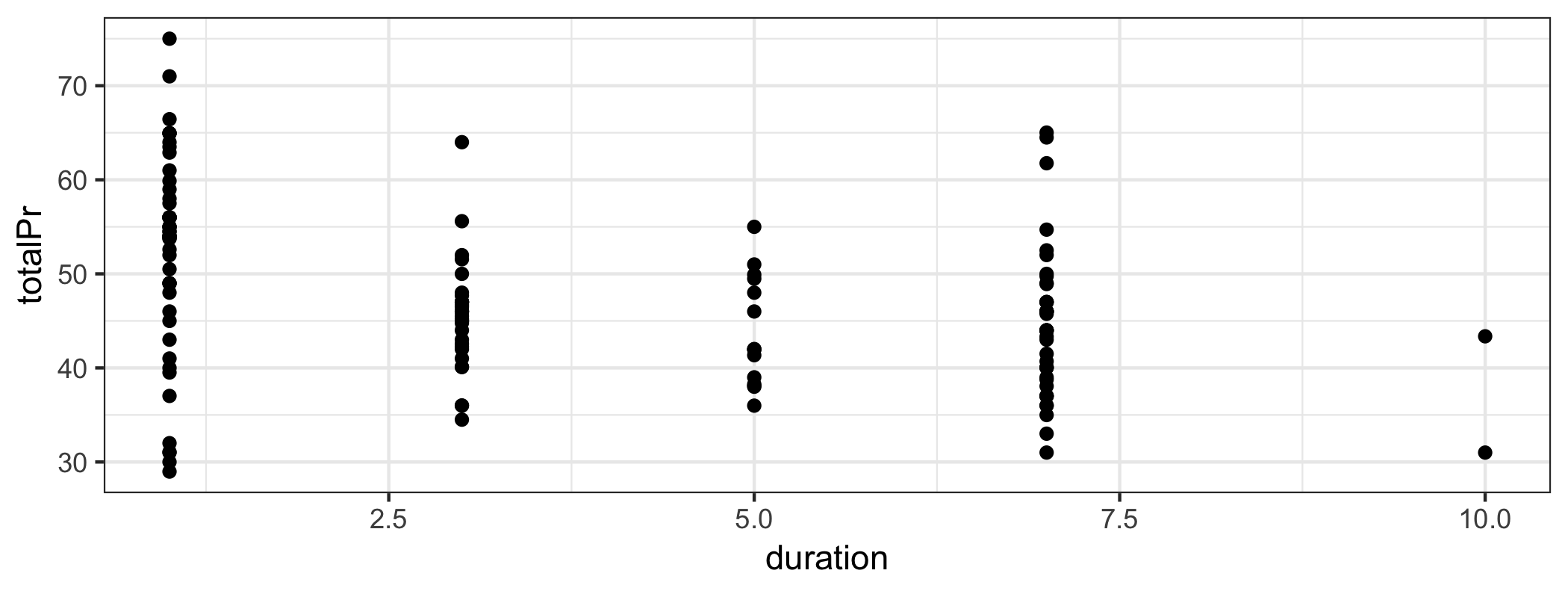

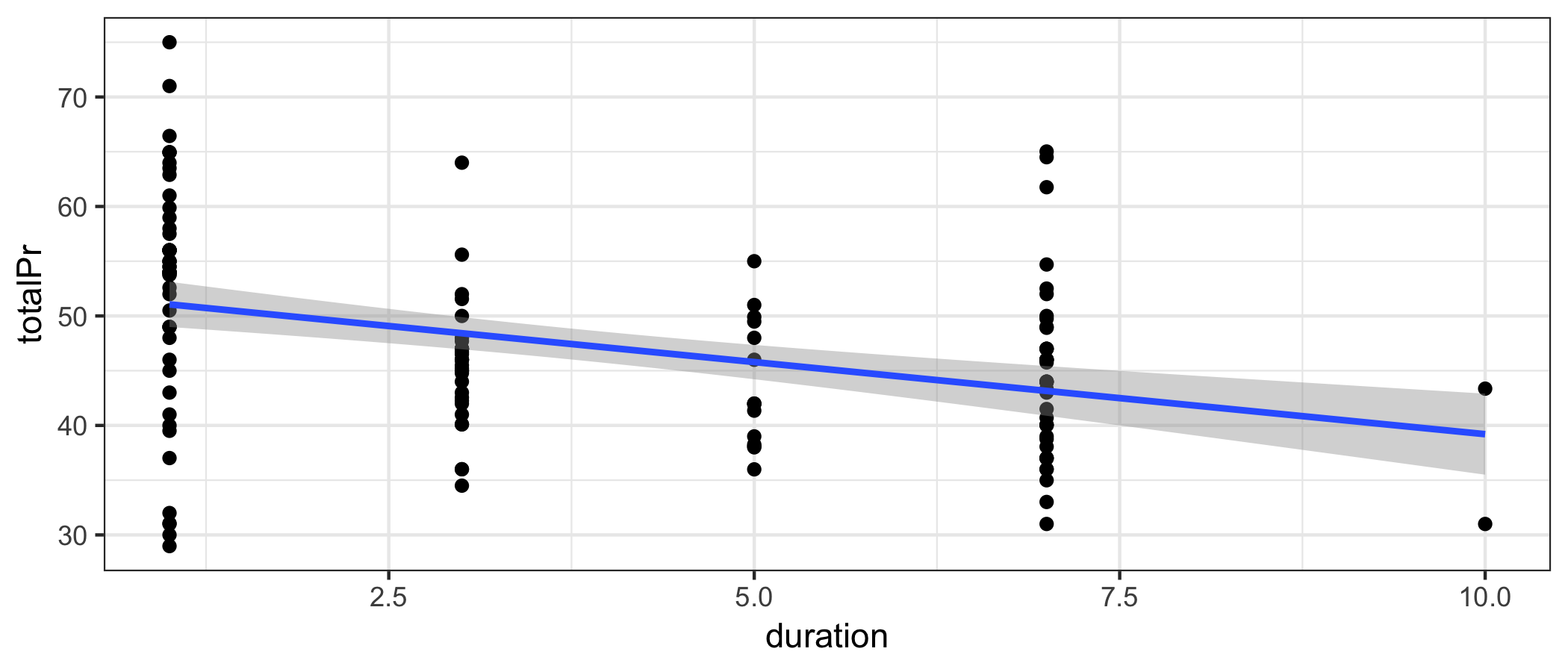

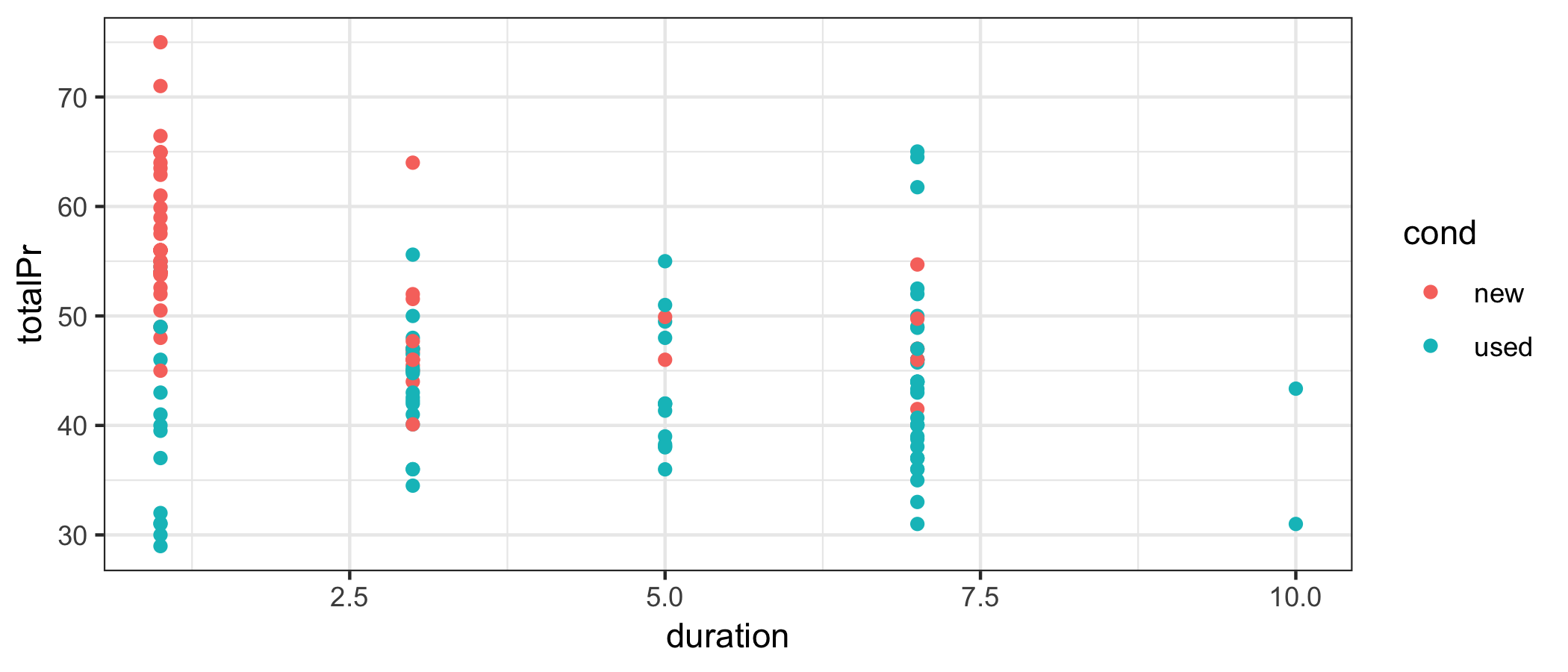

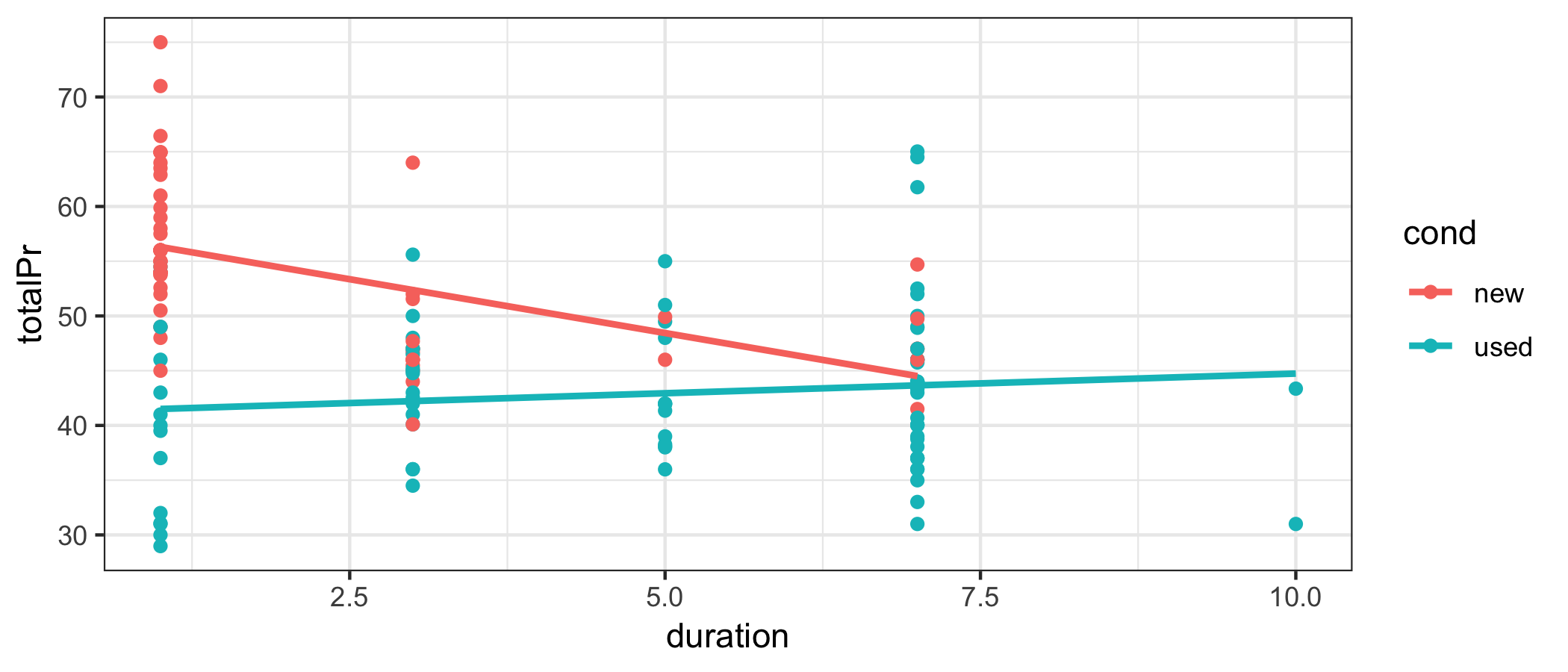

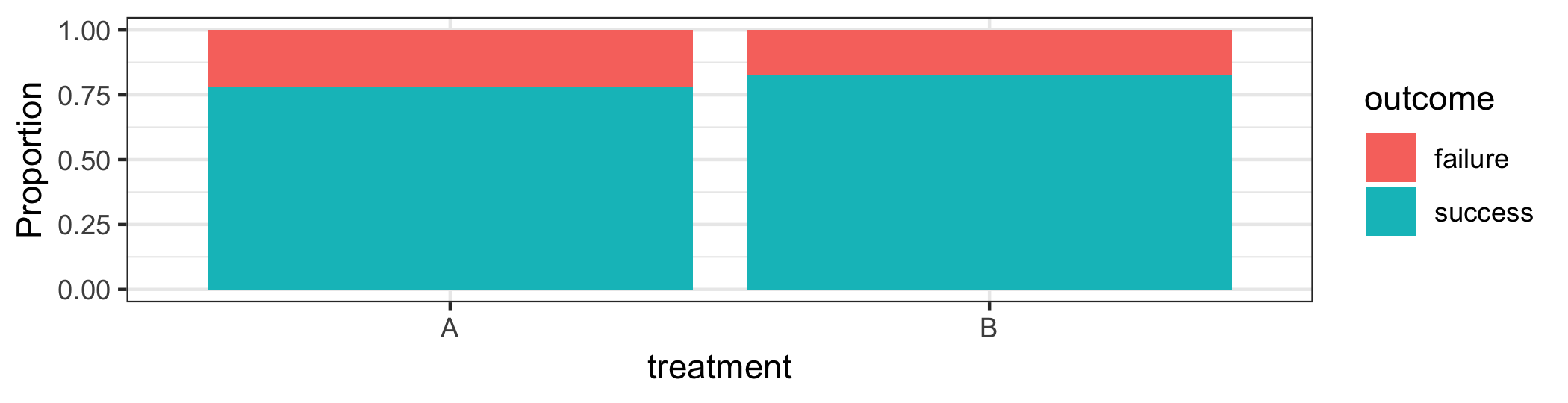

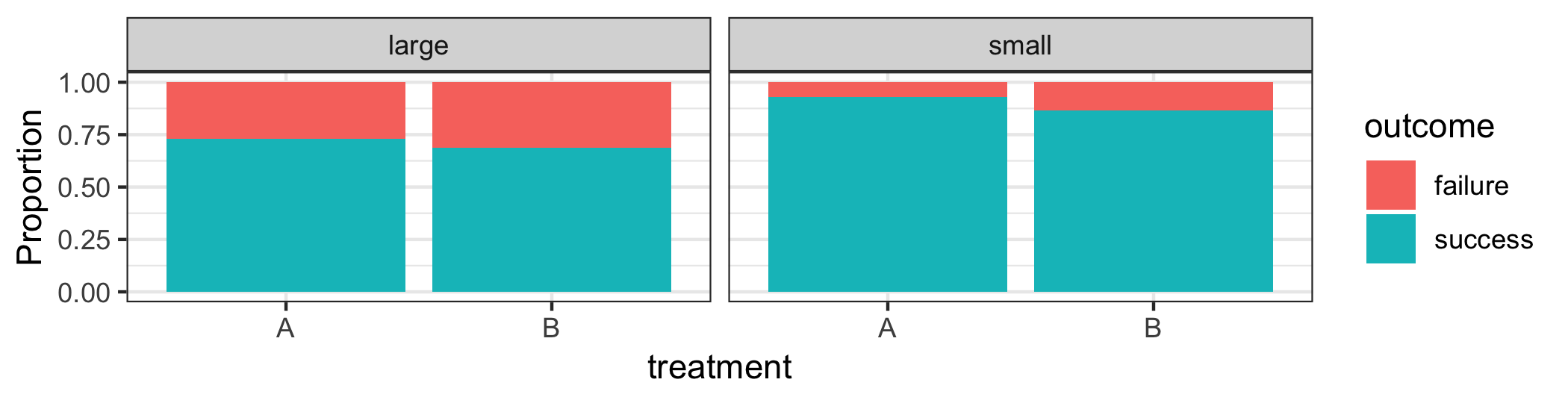

class: center, middle, inverse, title-slide

# STA130H1F
## Class #11
### Prof. Nathan Taback
### 2018-11-26

---

## Today's Class

- Inference for regression parameters
- Regression when the independent variable is a categorical variable
- Is the regression line the same for two groups?
- An example of a variable affecting a relationship in a non-regression setting
- Confounding

---

## Inference for regression parameters

What affects course evaluations? ... other than the quality of the course ...

* Data from course evaluations for a random sample of courses at the University of Texas at Austin.
* Each observation corresponds to a course.
* `score` is the average student evaluation for the course.
* `bty_avg` is the average beauty rating of the professor, based on ratings of physical appearance by 6 students in the course.

---

```r
glimpse(evals)
```

```
## Observations: 463
## Variables: 21
## $ score <dbl> 4.7, 4.1, 3.9, 4.8, 4.6, 4.3, 2.8, 4.1, 3.4, 4.5...
## $ rank <fct> tenure track, tenure track, tenure track, tenure...
## $ ethnicity <fct> minority, minority, minority, minority, not mino...
## $ gender <fct> female, female, female, female, male, male, male...
## $ language <fct> english, english, english, english, english, eng...
## $ age <int> 36, 36, 36, 36, 59, 59, 59, 51, 51, 40, 40, 40, ...
## $ cls_perc_eval <dbl> 55.81395, 68.80000, 60.80000, 62.60163, 85.00000...
## $ cls_did_eval <int> 24, 86, 76, 77, 17, 35, 39, 55, 111, 40, 24, 24,...
## $ cls_students <int> 43, 125, 125, 123, 20, 40, 44, 55, 195, 46, 27, ...
## $ cls_level <fct> upper, upper, upper, upper, upper, upper, upper,...
## $ cls_profs <fct> single, single, single, single, multiple, multip...
## $ cls_credits <fct> multi credit, multi credit, multi credit, multi ...
## $ bty_f1lower <int> 5, 5, 5, 5, 4, 4, 4, 5, 5, 2, 2, 2, 2, 2, 2, 2, ...
## $ bty_f1upper <int> 7, 7, 7, 7, 4, 4, 4, 2, 2, 5, 5, 5, 5, 5, 5, 5, ...
## $ bty_f2upper <int> 6, 6, 6, 6, 2, 2, 2, 5, 5, 4, 4, 4, 4, 4, 4, 4, ...
## $ bty_m1lower <int> 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, ...
## $ bty_m1upper <int> 4, 4, 4, 4, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, ...
## $ bty_m2upper <int> 6, 6, 6, 6, 3, 3, 3, 3, 3, 2, 2, 2, 2, 2, 2, 2, ...
## $ bty_avg <dbl> 5.000, 5.000, 5.000, 5.000, 3.000, 3.000, 3.000,...
## $ pic_outfit <fct> not formal, not formal, not formal, not formal, ...
## $ pic_color <fct> color, color, color, color, color, color, color,...
```

---

## Relationship between `score` and `bty_avg`?

```r
ggplot(evals, aes(x = bty_avg, y = score)) +
  geom_point() +
  theme_bw()
```

<!-- -->

---

Use some transparency so we can see where points overlap.

```r
ggplot(evals, aes(x = bty_avg, y = score)) +
  geom_point(alpha = 0.3) +
  theme_bw()
```

<!-- -->

---

Is there a relationship between `score` and `bty_avg`?

```r
ggplot(evals, aes(x = bty_avg, y = score)) +
  geom_point(alpha = 0.3) +
  theme_bw() +
  geom_smooth(method = "lm", fill = NA)
```

<!-- -->

---

What would the slope be if there were no relationship?

<br>
<br>
<br>

<!-- -->

---

## Confidence interval for the slope

.pull-left[

- The grey shaded area around the fitted regression line is a pointwise 95% confidence interval for the fitted line, i.e., for the mean `score` at each value of `bty_avg`. It reflects the uncertainty in both the estimated intercept and the estimated slope.

```r
ggplot(evals, aes(x = bty_avg, y = score)) +
  geom_point(alpha = 0.3) +
  theme_bw() +
  geom_smooth(method = "lm")
```

<!-- -->
]

.pull-right[

- The width of the band varies with the value of the independent variable `bty_avg`.
- The band is widest at the extremes of `bty_avg`: the regression line is estimated most precisely near the mean of the independent variable.
- The band shown is calculated from a probability model, but a similar interval can also be obtained using the bootstrap.
]

---

<!-- -->

*Does the confidence interval indicate that 0 is a plausible value for `\(\beta_1\)` (the parameter for the slope)?*

---

## Inference for regression part 2: <br>Hypothesis test for the slope

.small[

- The output of `summary()` for the estimated regression coefficients gives the results of a hypothesis test with hypotheses:

`$$H_0: \beta_1 = 0 \mbox{ versus } H_a: \beta_1 \ne 0$$`

```r
summary(lm(score ~ bty_avg, data = evals))$coefficients
```

```
##               Estimate Std. Error   t value      Pr(>|t|)
## (Intercept) 3.88033795 0.07614297 50.961212 1.561043e-191
## bty_avg     0.06663704 0.01629115  4.090382  5.082731e-05
```

- The estimate of the slope is 0.06664: on average, a one-unit increase in `bty_avg` is associated with an increase of about 0.067 in `score`.
- By default, `summary()` of an `lm()` fit calculates the P-value for the regression coefficients from a probability model that assumes all observations are *independent* and that the error terms have a *symmetric, bell-shaped (normal) distribution* with the same variance for every observation.
- The P-value is `\(5.08 \times 10^{-5} = 0.0000508\)`.
- *Does the hypothesis test for the slope indicate that the slope is different from 0?*
]

---

## What other factors might affect course evaluations?

<img src="highered_genderbias.png" alt="Drawing" style="float: center; width: 50%;"/>

---

# Regression when the independent variable is a categorical variable

---

## Relationship between `score` and `gender`?

.small[

```r
ggplot(evals, aes(x = gender, y = score)) +
  geom_point(alpha = 1/5) +
  theme_bw()
```

<!-- -->

```r
evals %>%
  group_by(gender) %>%
  summarise(n = n(), mean = mean(score))
```

```
## # A tibble: 2 x 3
##   gender     n  mean
##   <fct>  <int> <dbl>
## 1 female   195  4.09
## 2 male     268  4.23
```
]

---

## Regression with `gender` as the independent variable

```r
lm(score ~ gender, data = evals)$coefficients
```

```
## (Intercept)  gendermale
##   4.0928205   0.1415078
```

$$ \widehat{score} = 4.09 + 0.14 \, male $$

Interpretation: on average, course evaluation scores for male professors are `\(0.14\)` points higher than for female professors.
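
---

## Sketch: indicator coding on toy data

A minimal base-R sketch of the indicator coding behind the `gender` regression above, assuming a small made-up data frame `toy` (hypothetical numbers, not the `evals` data). `model.matrix()` shows the `gendermale` column that `lm()` builds, and the fitted intercept and slope should equal the baseline (`female`) mean and the male-minus-female difference in means.

```r
# Hypothetical toy data (NOT the evals data): 3 courses per gender
toy <- data.frame(
  score  = c(4.0, 4.2, 4.1, 4.3, 4.4, 4.2),
  gender = factor(c("female", "female", "female", "male", "male", "male"))
)

# "female" is the first factor level, so it is the baseline;
# lm() uses an indicator column `gendermale` (1 = male, 0 = female)
X <- model.matrix(score ~ gender, data = toy)
print(X)

fit <- lm(score ~ gender, data = toy)
group_means <- tapply(toy$score, toy$gender, mean)

coef(fit)                                    # intercept and slope
group_means["female"]                        # should match the intercept
group_means["male"] - group_means["female"]  # should match the slope
```

With `evals`, the same reasoning says the intercept `4.0928205` is the female mean (4.09) and `4.0928205 + 0.1415078` is the male mean (4.23).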
---

## Regression with `gender` as the independent variable

$$ \widehat{score} = 4.09 + 0.14 \, male $$

- In regression, `R` encodes categorical independent variables as **indicator variables** (also called **dummy variables**).
- `R` picks a baseline level of the categorical variable (by default, the first level of the factor). Here the baseline level is `female`.
- The indicator variable `male` is 1 for observations for which `gender` is male and 0 otherwise.
- For females, `$$\widehat{score} = 4.09$$`
- For males, `$$\widehat{score} = 4.09 + 0.14 = 4.23$$`

---

*Could the difference between the mean scores for males and females just be due to chance?*

The regression model is

`$$score_i = \beta_0 + \beta_1 \, male_i + \epsilon_i, \quad i = 1, \ldots, 463,$$`

where

`$$male_i = \begin{cases} 1 & \mbox{if the professor for course } i \mbox{ is male,}\\ 0 & \mbox{if the professor for course } i \mbox{ is female.} \end{cases}$$`

We can answer the question with a hypothesis test with hypotheses

`$$H_0: \beta_1 = 0 \mbox{ versus } H_a: \beta_1 \ne 0$$`

---

```r
summary(lm(score ~ gender, data = evals))$coefficients
```

```
##              Estimate Std. Error    t value    Pr(>|t|)
## (Intercept) 4.0928205 0.03866539 105.852305 0.000000000
## gendermale  0.1415078 0.05082127   2.784422 0.005582967
```

What conclusion do we make?

---

# Is the regression line the same for two groups?

---

## Is the relationship between `score` and `bty_avg` the same for male and female professors?
```r
ggplot(evals, aes(x = bty_avg, y = score, colour = gender)) +
  geom_point(alpha = 0.5) +
  theme_bw()
```

<!-- -->

---

**Model 1:**

`$$score_i = \beta_0 + \beta_1 \, male_i + \beta_2 \, bty\_avg_i + \epsilon_i, \quad i=1,\ldots, 463$$`

Model 1 for male professors (`\(male_i = 1\)`):

`$$score_i = (\beta_0 + \beta_1) + \beta_2 \, bty\_avg_i + \epsilon_i$$`

Model 1 for female professors (`\(male_i = 0\)`):

`$$score_i = \beta_0 + \beta_2 \, bty\_avg_i + \epsilon_i$$`

Both lines have the same slope `\(\beta_2\)`, and their intercepts differ by `\(\beta_1\)`, so the two lines are parallel.

---

## Fitted parallel lines

```r
parallel_lines <- lm(score ~ gender + bty_avg, data = evals)
parallel_lines$coefficients
```

```
## (Intercept)  gendermale     bty_avg
##  3.74733824  0.17238955  0.07415537
```

---

## Plotting the parallel lines

The `augment()` function (from the `broom` package) creates a data frame with the predicted values (`.fitted`), residuals (`.resid`), and other diagnostics for a fitted linear model.

```r
library(broom)
augment(parallel_lines)
```

```
## score gender bty_avg .fitted .se.fit .resid .hat ## 1 4.7 female 5.000 4.118115 0.03826383 0.581884899 0.005238155 ## 2 4.1 female 5.000 4.118115 0.03826383 -0.018115101 0.005238155 ## 3 3.9 female 5.000 4.118115 0.03826383 -0.218115101 0.005238155 ## 4 4.8 female 5.000 4.118115 0.03826383 0.681884899 0.005238155 ## 5 4.6 male 3.000 4.142194 0.03808791 0.457806096 0.005190100 ## 6 4.3 male 3.000 4.142194 0.03808791 0.157806096 0.005190100 ## 7 2.8 male 3.000 4.142194 0.03808791 -1.342193904 0.005190100 ## 8 4.1 male 3.333 4.166888 0.03551641 -0.066887644 0.004512941 ## 9 3.4 male 3.333 4.166888 0.03551641 -0.766887644 0.004512941 ## 10 4.5 female 3.167 3.982188 0.04495870 0.517811698 0.007231509 ## 11 3.8 female 3.167 3.982188 0.04495870 -0.182188302 0.007231509 ## 12 4.5 female 3.167 3.982188 0.04495870 0.517811698 0.007231509 ## 13 4.6 female 3.167 3.982188 0.04495870 0.617811698 0.007231509 ## 14 3.9 female 3.167 3.982188 0.04495870 -0.082188302 0.007231509 ## 15 3.9 female 3.167 3.982188 0.04495870 -0.082188302 0.007231509 ## 16 4.3 female 3.167 3.982188 0.04495870 0.317811698
0.007231509 ## 17 4.5 female 3.167 3.982188 0.04495870 0.517811698 0.007231509 ## 18 4.8 female 7.333 4.291120 0.05763815 0.508880414 0.011885613 ## 19 4.6 female 7.333 4.291120 0.05763815 0.308880414 0.011885613 ## 20 4.6 female 7.333 4.291120 0.05763815 0.308880414 0.011885613 ## 21 4.9 female 7.333 4.291120 0.05763815 0.608880414 0.011885613 ## 22 4.6 female 7.333 4.291120 0.05763815 0.308880414 0.011885613 ## 23 4.5 female 7.333 4.291120 0.05763815 0.208880414 0.011885613 ## 24 4.4 male 5.500 4.327582 0.03821855 0.072417663 0.005225766 ## 25 4.6 male 5.500 4.327582 0.03821855 0.272417663 0.005225766 ## 26 4.7 male 5.500 4.327582 0.03821855 0.372417663 0.005225766 ## 27 4.5 male 5.500 4.327582 0.03821855 0.172417663 0.005225766 ## 28 4.8 male 5.500 4.327582 0.03821855 0.472417663 0.005225766 ## 29 4.9 male 5.500 4.327582 0.03821855 0.572417663 0.005225766 ## 30 4.5 male 5.500 4.327582 0.03821855 0.172417663 0.005225766 ## 31 4.4 female 4.167 4.056344 0.03869495 0.343656325 0.005356856 ## 32 4.3 female 4.167 4.056344 0.03869495 0.243656325 0.005356856 ## 33 4.1 female 4.167 4.056344 0.03869495 0.043656325 0.005356856 ## 34 4.2 female 4.167 4.056344 0.03869495 0.143656325 0.005356856 ## 35 3.5 female 4.167 4.056344 0.03869495 -0.556343675 0.005356856 ## 36 3.4 female 4.000 4.043960 0.03934540 -0.643959728 0.005538466 ## 37 4.5 female 4.000 4.043960 0.03934540 0.456040272 0.005538466 ## 38 4.4 female 4.000 4.043960 0.03934540 0.356040272 0.005538466 ## 39 4.4 female 4.000 4.043960 0.03934540 0.356040272 0.005538466 ## 40 2.5 female 4.000 4.043960 0.03934540 -1.543959728 0.005538466 ## 41 4.3 female 4.000 4.043960 0.03934540 0.256040272 0.005538466 ## 42 4.5 female 4.000 4.043960 0.03934540 0.456040272 0.005538466 ## 43 4.8 female 4.667 4.093421 0.03786035 0.706578639 0.005128267 ## 44 4.8 female 4.667 4.093421 0.03786035 0.706578639 0.005128267 ## 45 4.4 female 4.667 4.093421 0.03786035 0.306578639 0.005128267 ## 46 4.7 female 4.667 4.093421 0.03786035 0.606578639 
0.005128267 ## 47 4.4 female 4.667 4.093421 0.03786035 0.306578639 0.005128267 ## 48 4.7 female 4.667 4.093421 0.03786035 0.606578639 0.005128267 ## 49 4.5 female 4.667 4.093421 0.03786035 0.406578639 0.005128267 ## 50 4.0 male 5.500 4.327582 0.03821855 -0.327582337 0.005225766 ## 51 4.3 male 5.500 4.327582 0.03821855 -0.027582337 0.005225766 ## 52 4.4 male 5.500 4.327582 0.03821855 0.072417663 0.005225766 ## 53 4.5 male 5.500 4.327582 0.03821855 0.172417663 0.005225766 ## 54 5.0 male 5.500 4.327582 0.03821855 0.672417663 0.005225766 ## 55 4.9 male 5.500 4.327582 0.03821855 0.572417663 0.005225766 ## 56 4.6 male 5.500 4.327582 0.03821855 0.272417663 0.005225766 ## 57 5.0 male 5.500 4.327582 0.03821855 0.672417663 0.005225766 ## 58 4.7 male 5.500 4.327582 0.03821855 0.372417663 0.005225766 ## 59 5.0 male 5.500 4.327582 0.03821855 0.672417663 0.005225766 ## 60 3.6 male 4.833 4.278121 0.03369075 -0.678120703 0.004060904 ## 61 3.7 male 4.833 4.278121 0.03369075 -0.578120703 0.004060904 ## 62 4.3 male 4.833 4.278121 0.03369075 0.021879297 0.004060904 ## 63 4.1 male 4.333 4.241043 0.03232826 -0.141043017 0.003739091 ## 64 4.2 male 4.333 4.241043 0.03232826 -0.041043017 0.003739091 ## 65 4.7 male 4.333 4.241043 0.03232826 0.458956983 0.003739091 ## 66 4.7 male 4.333 4.241043 0.03232826 0.458956983 0.003739091 ## 67 3.5 male 4.333 4.241043 0.03232826 -0.741043017 0.003739091 ## 68 4.1 male 4.833 4.278121 0.03369075 -0.178120703 0.004060904 ## 69 4.2 male 4.833 4.278121 0.03369075 -0.078120703 0.004060904 ## 70 4.0 male 4.833 4.278121 0.03369075 -0.278120703 0.004060904 ## 71 4.0 male 4.833 4.278121 0.03369075 -0.278120703 0.004060904 ## 72 3.9 male 4.833 4.278121 0.03369075 -0.378120703 0.004060904 ## 73 4.4 male 4.833 4.278121 0.03369075 0.121879297 0.004060904 ## 74 3.8 male 4.833 4.278121 0.03369075 -0.478120703 0.004060904 ## 75 3.5 male 4.000 4.216349 0.03253424 -0.716349277 0.003786892 ## 76 4.2 male 4.000 4.216349 0.03253424 -0.016349277 0.003786892 ## 77 3.5 male 
4.000 4.216349 0.03253424 -0.716349277 0.003786892 ## 78 3.6 male 4.000 4.216349 0.03253424 -0.616349277 0.003786892 ## 79 2.9 female 5.500 4.155193 0.04025233 -1.255192787 0.005796737 ## 80 3.3 female 5.500 4.155193 0.04025233 -0.855192787 0.005796737 ## 81 3.3 female 5.500 4.155193 0.04025233 -0.855192787 0.005796737 ## 82 3.2 female 5.500 4.155193 0.04025233 -0.955192787 0.005796737 ## 83 4.6 male 4.167 4.228733 0.03231802 0.371266775 0.003736723 ## 84 4.2 male 4.167 4.228733 0.03231802 -0.028733225 0.003736723 ## 85 4.3 male 4.167 4.228733 0.03231802 0.071266775 0.003736723 ## 86 4.4 male 4.167 4.228733 0.03231802 0.171266775 0.003736723 ## 87 4.1 male 4.167 4.228733 0.03231802 -0.128733225 0.003736723 ## 88 4.6 male 4.167 4.228733 0.03231802 0.371266775 0.003736723 ## 89 4.4 female 2.500 3.932727 0.05161850 0.467273332 0.009532618 ## 90 4.8 female 2.500 3.932727 0.05161850 0.867273332 0.009532618 ## 91 4.3 female 2.500 3.932727 0.05161850 0.367273332 0.009532618 ## 92 3.6 female 2.500 3.932727 0.05161850 -0.332726668 0.009532618 ## 93 4.3 female 2.500 3.932727 0.05161850 0.367273332 0.009532618 ## 94 4.0 male 4.333 4.241043 0.03232826 -0.241043017 0.003739091 ## 95 4.2 male 4.333 4.241043 0.03232826 -0.041043017 0.003739091 ## 96 4.1 male 4.333 4.241043 0.03232826 -0.141043017 0.003739091 ## 97 4.1 male 4.333 4.241043 0.03232826 -0.141043017 0.003739091 ## 98 4.4 male 4.333 4.241043 0.03232826 0.158956983 0.003739091 ## 99 4.3 male 4.333 4.241043 0.03232826 0.058956983 0.003739091 ## 100 4.4 male 4.333 4.241043 0.03232826 0.158956983 0.003739091 ## 101 4.4 male 4.333 4.241043 0.03232826 0.158956983 0.003739091 ## 102 4.9 female 4.333 4.068653 0.03822881 0.831346533 0.005228571 ## 103 5.0 female 4.333 4.068653 0.03822881 0.931346533 0.005228571 ## 104 4.4 female 4.333 4.068653 0.03822881 0.331346533 0.005228571 ## 105 4.8 female 4.333 4.068653 0.03822881 0.731346533 0.005228571 ## 106 4.9 female 4.333 4.068653 0.03822881 0.831346533 0.005228571 ## 107 4.3 
female 4.333 4.068653 0.03822881 0.231346533 0.005228571 ## 108 5.0 female 4.333 4.068653 0.03822881 0.931346533 0.005228571 ## 109 4.7 female 4.333 4.068653 0.03822881 0.631346533 0.005228571 ## 110 4.5 female 4.333 4.068653 0.03822881 0.431346533 0.005228571 ## 111 3.5 female 4.333 4.068653 0.03822881 -0.568653467 0.005228571 ## 112 3.9 female 4.333 4.068653 0.03822881 -0.168653467 0.005228571 ## 113 4.0 female 4.333 4.068653 0.03822881 -0.068653467 0.005228571 ## 114 4.0 female 4.333 4.068653 0.03822881 -0.068653467 0.005228571 ## 115 3.7 female 4.333 4.068653 0.03822881 -0.368653467 0.005228571 ## 116 3.4 female 4.333 4.068653 0.03822881 -0.668653467 0.005228571 ## 117 3.3 female 4.333 4.068653 0.03822881 -0.768653467 0.005228571 ## 118 3.8 female 4.333 4.068653 0.03822881 -0.268653467 0.005228571 ## 119 3.9 female 4.333 4.068653 0.03822881 -0.168653467 0.005228571 ## 120 3.4 female 4.333 4.068653 0.03822881 -0.668653467 0.005228571 ## 121 3.7 female 4.833 4.105731 0.03796571 -0.405731153 0.005156849 ## 122 4.1 female 4.833 4.105731 0.03796571 -0.005731153 0.005156849 ## 123 3.7 female 4.833 4.105731 0.03796571 -0.405731153 0.005156849 ## 124 3.5 female 4.833 4.105731 0.03796571 -0.605731153 0.005156849 ## 125 3.5 female 4.833 4.105731 0.03796571 -0.605731153 0.005156849 ## 126 4.4 female 4.833 4.105731 0.03796571 0.294268847 0.005156849 ## 127 3.4 female 2.833 3.957420 0.04810386 -0.557420407 0.008278685 ## 128 4.3 male 3.000 4.142194 0.03808791 0.157806096 0.005190100 ## 129 3.7 male 3.000 4.142194 0.03808791 -0.442193904 0.005190100 ## 130 4.7 male 3.000 4.142194 0.03808791 0.557806096 0.005190100 ## 131 3.9 male 3.000 4.142194 0.03808791 -0.242193904 0.005190100 ## 132 3.6 male 3.000 4.142194 0.03808791 -0.542193904 0.005190100 ## 133 4.5 male 4.167 4.228733 0.03231802 0.271266775 0.003736723 ## 134 4.5 male 4.167 4.228733 0.03231802 0.271266775 0.003736723 ## 135 4.8 male 4.167 4.228733 0.03231802 0.571266775 0.003736723 ## 136 4.8 male 4.167 4.228733 
0.03231802 0.571266775 0.003736723 ## 137 4.7 male 4.167 4.228733 0.03231802 0.471266775 0.003736723 ## 138 4.5 male 4.167 4.228733 0.03231802 0.271266775 0.003736723 ## 139 4.3 male 4.167 4.228733 0.03231802 0.071266775 0.003736723 ## 140 4.8 female 7.833 4.328197 0.06398835 0.471802728 0.014648840 ## 141 4.1 female 7.833 4.328197 0.06398835 -0.228197272 0.014648840 ## 142 4.4 male 3.833 4.203965 0.03297321 0.196034670 0.003889770 ## 143 4.3 male 3.833 4.203965 0.03297321 0.096034670 0.003889770 ## 144 3.6 male 3.833 4.203965 0.03297321 -0.603965330 0.003889770 ## 145 4.5 male 3.833 4.203965 0.03297321 0.296034670 0.003889770 ## 146 4.3 male 3.833 4.203965 0.03297321 0.096034670 0.003889770 ## 147 4.4 male 4.833 4.278121 0.03369075 0.121879297 0.004060904 ## 148 4.7 male 4.833 4.278121 0.03369075 0.421879297 0.004060904 ## 149 4.8 male 4.833 4.278121 0.03369075 0.521879297 0.004060904 ## 150 3.5 male 4.833 4.278121 0.03369075 -0.778120703 0.004060904 ## 151 3.8 male 4.833 4.278121 0.03369075 -0.478120703 0.004060904 ## 152 3.6 male 4.833 4.278121 0.03369075 -0.678120703 0.004060904 ## 153 4.2 male 4.833 4.278121 0.03369075 -0.078120703 0.004060904 ## 154 3.6 male 3.000 4.142194 0.03808791 -0.542193904 0.005190100 ## 155 4.4 male 3.000 4.142194 0.03808791 0.257806096 0.005190100 ## 156 3.7 male 3.000 4.142194 0.03808791 -0.442193904 0.005190100 ## 157 4.3 male 3.000 4.142194 0.03808791 0.157806096 0.005190100 ## 158 4.6 male 3.000 4.142194 0.03808791 0.457806096 0.005190100 ## 159 4.6 male 3.000 4.142194 0.03808791 0.457806096 0.005190100 ## 160 4.1 male 3.000 4.142194 0.03808791 -0.042193904 0.005190100 ## 161 3.6 male 3.000 4.142194 0.03808791 -0.542193904 0.005190100 ## 162 2.3 female 5.167 4.130499 0.03875022 -1.830499048 0.005372170 ## 163 4.3 male 4.333 4.241043 0.03232826 0.058956983 0.003739091 ## 164 4.4 male 4.333 4.241043 0.03232826 0.158956983 0.003739091 ## 165 3.6 male 4.333 4.241043 0.03232826 -0.641043017 0.003739091 ## 166 4.4 male 4.333 4.241043 
0.03232826 0.158956983 0.003739091 ## 167 3.9 male 4.333 4.241043 0.03232826 -0.341043017 0.003739091 ## 168 3.8 male 4.333 4.241043 0.03232826 -0.441043017 0.003739091 ## 169 3.4 male 4.333 4.241043 0.03232826 -0.841043017 0.003739091 ## 170 4.9 male 2.667 4.117500 0.04121336 0.782499835 0.006076836 ## 171 4.1 male 2.667 4.117500 0.04121336 -0.017500165 0.006076836 ## 172 3.2 male 2.667 4.117500 0.04121336 -0.917500165 0.006076836 ## 173 4.2 male 5.500 4.327582 0.03821855 -0.127582337 0.005225766 ## 174 3.9 male 5.500 4.327582 0.03821855 -0.427582337 0.005225766 ## 175 4.9 male 5.500 4.327582 0.03821855 0.572417663 0.005225766 ## 176 4.7 male 5.500 4.327582 0.03821855 0.372417663 0.005225766 ## 177 4.4 male 5.500 4.327582 0.03821855 0.072417663 0.005225766 ## 178 4.2 female 4.333 4.068653 0.03822881 0.131346533 0.005228571 ## 179 4.0 female 4.333 4.068653 0.03822881 -0.068653467 0.005228571 ## 180 4.4 female 4.333 4.068653 0.03822881 0.331346533 0.005228571 ## 181 3.9 female 4.333 4.068653 0.03822881 -0.168653467 0.005228571 ## 182 4.4 female 4.333 4.068653 0.03822881 0.331346533 0.005228571 ## 183 3.0 female 4.333 4.068653 0.03822881 -1.068653467 0.005228571 ## 184 3.5 female 4.333 4.068653 0.03822881 -0.568653467 0.005228571 ## 185 2.8 female 4.333 4.068653 0.03822881 -1.268653467 0.005228571 ## 186 4.6 female 4.333 4.068653 0.03822881 0.531346533 0.005228571 ## 187 4.3 female 4.333 4.068653 0.03822881 0.231346533 0.005228571 ## 188 3.4 female 4.333 4.068653 0.03822881 -0.668653467 0.005228571 ## 189 3.0 female 4.333 4.068653 0.03822881 -1.068653467 0.005228571 ## 190 4.2 female 4.333 4.068653 0.03822881 0.131346533 0.005228571 ## 191 4.3 male 2.333 4.092732 0.04478818 0.207267729 0.007176756 ## 192 4.1 male 2.333 4.092732 0.04478818 0.007267729 0.007176756 ## 193 4.6 male 2.333 4.092732 0.04478818 0.507267729 0.007176756 ## 194 3.9 female 6.500 4.229348 0.04825670 -0.329348160 0.008331375 ## 195 3.5 female 6.500 4.229348 0.04825670 -0.729348160 0.008331375 ## 
196 4.0 female 6.500 4.229348 0.04825670 -0.229348160 0.008331375 ## 197 4.0 female 6.500 4.229348 0.04825670 -0.229348160 0.008331375 ## 198 3.9 male 2.333 4.092732 0.04478818 -0.192732271 0.007176756 ## 199 3.3 male 2.333 4.092732 0.04478818 -0.792732271 0.007176756 ## 200 4.0 male 2.333 4.092732 0.04478818 -0.092732271 0.007176756 ## 201 3.8 male 2.333 4.092732 0.04478818 -0.292732271 0.007176756 ## 202 4.2 male 2.333 4.092732 0.04478818 0.107267729 0.007176756 ## 203 4.0 male 2.333 4.092732 0.04478818 -0.092732271 0.007176756 ## 204 3.8 male 2.333 4.092732 0.04478818 -0.292732271 0.007176756 ## 205 3.3 male 2.333 4.092732 0.04478818 -0.792732271 0.007176756 ## 206 4.1 male 3.000 4.142194 0.03808791 -0.042193904 0.005190100 ## 207 4.7 male 3.000 4.142194 0.03808791 0.557806096 0.005190100 ## 208 4.4 male 3.000 4.142194 0.03808791 0.257806096 0.005190100 ## 209 4.8 male 3.667 4.191656 0.03362167 0.608344462 0.004044269 ## 210 4.8 male 3.667 4.191656 0.03362167 0.608344462 0.004044269 ## 211 4.6 male 3.667 4.191656 0.03362167 0.408344462 0.004044269 ## 212 4.6 male 3.667 4.191656 0.03362167 0.408344462 0.004044269 ## 213 4.8 male 3.667 4.191656 0.03362167 0.608344462 0.004044269 ## 214 4.4 male 3.667 4.191656 0.03362167 0.208344462 0.004044269 ## 215 4.7 male 3.667 4.191656 0.03362167 0.508344462 0.004044269 ## 216 4.7 male 3.667 4.191656 0.03362167 0.508344462 0.004044269 ## 217 3.3 male 6.167 4.377044 0.04495853 -1.077043971 0.007231454 ## 218 4.4 male 4.000 4.216349 0.03253424 0.183650723 0.003786892 ## 219 4.3 male 4.000 4.216349 0.03253424 0.083650723 0.003786892 ## 220 4.9 male 4.000 4.216349 0.03253424 0.683650723 0.003786892 ## 221 4.4 male 4.000 4.216349 0.03253424 0.183650723 0.003786892 ## 222 4.7 male 4.000 4.216349 0.03253424 0.483650723 0.003786892 ## 223 4.3 male 4.833 4.278121 0.03369075 0.021879297 0.004060904 ## 224 4.8 male 4.833 4.278121 0.03369075 0.521879297 0.004060904 ## 225 4.5 male 4.833 4.278121 0.03369075 0.221879297 0.004060904 ## 226 
4.7 male 4.833 4.278121 0.03369075 0.421879297 0.004060904 ## 227 3.3 female 8.167 4.352965 0.06843987 -1.052965167 0.016757907 ## 228 4.7 female 8.167 4.352965 0.06843987 0.347034833 0.016757907 ## 229 4.6 female 8.167 4.352965 0.06843987 0.247034833 0.016757907 ## 230 3.6 female 8.167 4.352965 0.06843987 -0.752965167 0.016757907 ## 231 4.0 male 6.500 4.401738 0.04887853 -0.401737710 0.008547473 ## 232 4.1 male 6.500 4.401738 0.04887853 -0.301737710 0.008547473 ## 233 4.0 male 6.500 4.401738 0.04887853 -0.401737710 0.008547473 ## 234 4.5 male 4.833 4.278121 0.03369075 0.221879297 0.004060904 ## 235 4.6 male 4.833 4.278121 0.03369075 0.321879297 0.004060904 ## 236 4.8 male 4.833 4.278121 0.03369075 0.521879297 0.004060904 ## 237 4.6 male 4.833 4.278121 0.03369075 0.321879297 0.004060904 ## 238 4.9 male 7.000 4.438815 0.05523981 0.461184604 0.010917064 ## 239 3.1 male 7.000 4.438815 0.05523981 -1.338815396 0.010917064 ## 240 3.7 male 7.000 4.438815 0.05523981 -0.738815396 0.010917064 ## 241 3.7 female 4.667 4.093421 0.03786035 -0.393421361 0.005128267 ## 242 3.9 female 3.833 4.031576 0.04016909 -0.131575780 0.005772784 ## 243 3.9 female 3.833 4.031576 0.04016909 -0.131575780 0.005772784 ## 244 3.2 female 3.833 4.031576 0.04016909 -0.831575780 0.005772784 ## 245 4.4 female 3.167 3.982188 0.04495870 0.417811698 0.007231509 ## 246 4.2 female 3.167 3.982188 0.04495870 0.217811698 0.007231509 ## 247 4.7 female 3.167 3.982188 0.04495870 0.717811698 0.007231509 ## 248 3.9 female 3.167 3.982188 0.04495870 -0.082188302 0.007231509 ## 249 3.6 female 3.167 3.982188 0.04495870 -0.382188302 0.007231509 ## 250 3.4 female 3.167 3.982188 0.04495870 -0.582188302 0.007231509 ## 251 4.4 female 3.167 3.982188 0.04495870 0.417811698 0.007231509 ## 252 4.4 male 3.167 4.154578 0.03672119 0.245422148 0.004824307 ## 253 4.1 male 3.167 4.154578 0.03672119 -0.054577852 0.004824307 ## 254 3.6 male 3.167 4.154578 0.03672119 -0.554577852 0.004824307 ## 255 3.5 male 3.167 4.154578 0.03672119 
-0.654577852 0.004824307 ## 256 4.1 male 3.167 4.154578 0.03672119 -0.054577852 0.004824307 ## 257 3.8 male 3.167 4.154578 0.03672119 -0.354577852 0.004824307 ## 258 4.0 male 3.167 4.154578 0.03672119 -0.154577852 0.004824307 ## 259 4.8 male 3.167 4.154578 0.03672119 0.645422148 0.004824307 ## 260 4.2 male 3.167 4.154578 0.03672119 0.045422148 0.004824307 ## 261 4.6 male 3.167 4.154578 0.03672119 0.445422148 0.004824307 ## 262 4.3 male 3.167 4.154578 0.03672119 0.145422148 0.004824307 ## 263 4.8 male 3.167 4.154578 0.03672119 0.645422148 0.004824307 ## 264 3.8 male 3.167 4.154578 0.03672119 -0.354577852 0.004824307 ## 265 4.5 female 5.833 4.179887 0.04239694 0.320113474 0.006430880 ## 266 4.9 female 5.833 4.179887 0.04239694 0.720113474 0.006430880 ## 267 4.9 female 5.833 4.179887 0.04239694 0.720113474 0.006430880 ## 268 4.8 female 5.833 4.179887 0.04239694 0.620113474 0.006430880 ## 269 4.7 female 5.833 4.179887 0.04239694 0.520113474 0.006430880 ## 270 4.6 female 5.833 4.179887 0.04239694 0.420113474 0.006430880 ## 271 4.3 male 5.667 4.339966 0.03973620 -0.039966284 0.005649034 ## 272 4.4 male 5.667 4.339966 0.03973620 0.060033716 0.005649034 ## 273 4.5 male 5.667 4.339966 0.03973620 0.160033716 0.005649034 ## 274 4.2 male 5.667 4.339966 0.03973620 -0.139966284 0.005649034 ## 275 4.8 female 6.500 4.229348 0.04825670 0.570651840 0.008331375 ## 276 4.6 female 6.500 4.229348 0.04825670 0.370651840 0.008331375 ## 277 4.9 female 6.500 4.229348 0.04825670 0.670651840 0.008331375 ## 278 4.8 female 6.500 4.229348 0.04825670 0.570651840 0.008331375 ## 279 4.8 female 6.500 4.229348 0.04825670 0.570651840 0.008331375 ## 280 4.6 female 6.500 4.229348 0.04825670 0.370651840 0.008331375 ## 281 4.7 female 6.500 4.229348 0.04825670 0.470651840 0.008331375 ## 282 4.1 female 1.667 3.870955 0.06162598 0.229044757 0.013587174 ## 283 3.8 female 1.667 3.870955 0.06162598 -0.070955243 0.013587174 ## 284 4.0 female 1.667 3.870955 0.06162598 0.129044757 0.013587174 ## 285 4.1 female 
1.667 3.870955 0.06162598 0.229044757 0.013587174 ## 286 4.0 female 1.667 3.870955 0.06162598 0.129044757 0.013587174 ## 287 4.1 female 1.667 3.870955 0.06162598 0.229044757 0.013587174 ## 288 3.5 male 6.667 4.414122 0.05094741 -0.914121657 0.009286365 ## 289 4.1 male 6.667 4.414122 0.05094741 -0.314121657 0.009286365 ## 290 3.6 male 6.667 4.414122 0.05094741 -0.814121657 0.009286365 ## 291 4.0 male 3.667 4.191656 0.03362167 -0.191655538 0.004044269 ## 292 3.9 male 3.667 4.191656 0.03362167 -0.291655538 0.004044269 ## 293 3.8 male 3.667 4.191656 0.03362167 -0.391655538 0.004044269 ## 294 4.4 male 3.833 4.203965 0.03297321 0.196034670 0.003889770 ## 295 4.7 male 3.833 4.203965 0.03297321 0.496034670 0.003889770 ## 296 3.8 female 6.167 4.204654 0.04510128 -0.404654421 0.007277449 ## 297 4.1 female 6.167 4.204654 0.04510128 -0.104654421 0.007277449 ## 298 4.1 female 3.333 3.994498 0.04356300 0.105501906 0.006789489 ## 299 4.7 female 3.333 3.994498 0.04356300 0.705501906 0.006789489 ## 300 4.3 female 3.333 3.994498 0.04356300 0.305501906 0.006789489 ## 301 4.4 female 3.333 3.994498 0.04356300 0.405501906 0.006789489 ## 302 4.5 female 3.333 3.994498 0.04356300 0.505501906 0.006789489 ## 303 3.1 female 3.333 3.994498 0.04356300 -0.894498094 0.006789489 ## 304 3.7 female 3.333 3.994498 0.04356300 -0.294498094 0.006789489 ## 305 4.5 female 3.333 3.994498 0.04356300 0.505501906 0.006789489 ## 306 3.0 female 3.333 3.994498 0.04356300 -0.994498094 0.006789489 ## 307 4.6 female 3.333 3.994498 0.04356300 0.605501906 0.006789489 ## 308 3.7 male 3.667 4.191656 0.03362167 -0.491655538 0.004044269 ## 309 3.6 male 3.667 4.191656 0.03362167 -0.591655538 0.004044269 ## 310 3.2 female 3.500 4.006882 0.04228629 -0.806882041 0.006397358 ## 311 3.3 female 3.500 4.006882 0.04228629 -0.706882041 0.006397358 ## 312 2.9 female 3.500 4.006882 0.04228629 -1.106882041 0.006397358 ## 313 4.2 male 2.667 4.117500 0.04121336 0.082499835 0.006076836 ## 314 4.5 male 5.667 4.339966 0.03973620 
0.160033716 0.005649034 ## 315 3.8 female 6.000 4.192270 0.04368577 -0.392270474 0.006827810 ## 316 3.7 female 6.000 4.192270 0.04368577 -0.492270474 0.006827810 ## 317 3.7 female 6.500 4.229348 0.04825670 -0.529348160 0.008331375 ## 318 4.0 female 6.500 4.229348 0.04825670 -0.229348160 0.008331375 ## 319 3.7 female 6.500 4.229348 0.04825670 -0.529348160 0.008331375 ## 320 4.5 female 2.333 3.920343 0.05350042 0.579657279 0.010240373 ## 321 3.8 female 2.333 3.920343 0.05350042 -0.120342721 0.010240373 ## 322 3.9 female 2.333 3.920343 0.05350042 -0.020342721 0.010240373 ## 323 4.6 female 2.333 3.920343 0.05350042 0.679657279 0.010240373 ## 324 4.5 female 2.333 3.920343 0.05350042 0.579657279 0.010240373 ## 325 4.2 female 2.333 3.920343 0.05350042 0.279657279 0.010240373 ## 326 4.0 female 2.333 3.920343 0.05350042 0.079657279 0.010240373 ## 327 3.8 male 2.333 4.092732 0.04478818 -0.292732271 0.007176756 ## 328 3.5 male 2.333 4.092732 0.04478818 -0.592732271 0.007176756 ## 329 2.7 male 2.333 4.092732 0.04478818 -1.392732271 0.007176756 ## 330 4.0 male 2.333 4.092732 0.04478818 -0.092732271 0.007176756 ## 331 4.6 male 2.333 4.092732 0.04478818 0.507267729 0.007176756 ## 332 3.9 male 2.333 4.092732 0.04478818 -0.192732271 0.007176756 ## 333 4.5 male 7.167 4.451199 0.05746369 0.048800656 0.011813768 ## 334 3.7 male 7.167 4.451199 0.05746369 -0.751199344 0.011813768 ## 335 2.4 male 1.667 4.043345 0.05286711 -1.643344792 0.009999369 ## 336 3.1 male 1.667 4.043345 0.05286711 -0.943344792 0.009999369 ## 337 2.5 male 1.667 4.043345 0.05286711 -1.543344792 0.009999369 ## 338 3.0 female 5.167 4.130499 0.03875022 -1.130499048 0.005372170 ## 339 4.5 male 3.500 4.179272 0.03447535 0.320728409 0.004252250 ## 340 4.8 male 3.500 4.179272 0.03447535 0.620728409 0.004252250 ## 341 4.9 male 3.500 4.179272 0.03447535 0.720728409 0.004252250 ## 342 4.5 male 3.500 4.179272 0.03447535 0.320728409 0.004252250 ## 343 4.6 male 3.500 4.179272 0.03447535 0.420728409 0.004252250 ## 344 4.5 male 
3.500 4.179272 0.03447535 0.320728409 0.004252250 ## 345 4.9 male 3.500 4.179272 0.03447535 0.720728409 0.004252250 ## 346 4.4 male 3.500 4.179272 0.03447535 0.220728409 0.004252250 ## 347 4.6 male 3.500 4.179272 0.03447535 0.420728409 0.004252250 ## 348 4.6 male 3.333 4.166888 0.03551641 0.433112356 0.004512941 ## 349 5.0 male 3.333 4.166888 0.03551641 0.833112356 0.004512941 ## 350 4.9 male 3.333 4.166888 0.03551641 0.733112356 0.004512941 ## 351 4.6 male 3.333 4.166888 0.03551641 0.433112356 0.004512941 ## 352 4.8 male 3.333 4.166888 0.03551641 0.633112356 0.004512941 ## 353 4.9 male 3.333 4.166888 0.03551641 0.733112356 0.004512941 ## 354 4.9 male 3.333 4.166888 0.03551641 0.733112356 0.004512941 ## 355 4.9 male 3.333 4.166888 0.03551641 0.733112356 0.004512941 ## 356 5.0 male 3.333 4.166888 0.03551641 0.833112356 0.004512941 ## 357 4.5 male 3.333 4.166888 0.03551641 0.333112356 0.004512941 ## 358 3.5 male 5.833 4.352276 0.04136624 -0.852276076 0.006122003 ## 359 3.8 male 5.833 4.352276 0.04136624 -0.552276076 0.006122003 ## 360 3.9 male 5.833 4.352276 0.04136624 -0.452276076 0.006122003 ## 361 3.9 male 5.833 4.352276 0.04136624 -0.452276076 0.006122003 ## 362 4.2 male 5.833 4.352276 0.04136624 -0.152276076 0.006122003 ## 363 4.1 male 5.833 4.352276 0.04136624 -0.252276076 0.006122003 ## 364 4.8 male 6.167 4.377044 0.04495853 0.422956029 0.007231454 ## 365 4.8 male 6.167 4.377044 0.04495853 0.422956029 0.007231454 ## 366 4.8 male 6.167 4.377044 0.04495853 0.422956029 0.007231454 ## 367 4.8 male 6.167 4.377044 0.04495853 0.422956029 0.007231454 ## 368 4.9 male 6.167 4.377044 0.04495853 0.522956029 0.007231454 ## 369 4.2 male 3.333 4.166888 0.03551641 0.033112356 0.004512941 ## 370 4.5 male 3.333 4.166888 0.03551641 0.333112356 0.004512941 ## 371 3.9 male 3.333 4.166888 0.03551641 -0.266887644 0.004512941 ## 372 4.4 male 3.333 4.166888 0.03551641 0.233112356 0.004512941 ## 373 4.0 female 5.167 4.130499 0.03875022 -0.130499048 0.005372170 ## 374 3.6 female 5.167 
4.130499 0.03875022 -0.530499048 0.005372170 ## 375 3.7 female 4.167 4.056344 0.03869495 -0.356343675 0.005356856 ## 376 2.7 female 4.167 4.056344 0.03869495 -1.356343675 0.005356856 ## 377 4.5 female 2.500 3.932727 0.05161850 0.567273332 0.009532618 ## 378 4.4 female 2.500 3.932727 0.05161850 0.467273332 0.009532618 ## 379 3.9 female 2.500 3.932727 0.05161850 -0.032726668 0.009532618 ## 380 3.6 female 2.500 3.932727 0.05161850 -0.332726668 0.009532618 ## 381 4.4 female 2.500 3.932727 0.05161850 0.467273332 0.009532618 ## 382 4.4 female 2.500 3.932727 0.05161850 0.467273332 0.009532618 ## 383 4.7 male 4.333 4.241043 0.03232826 0.458956983 0.003739091 ## 384 4.5 male 4.333 4.241043 0.03232826 0.258956983 0.003739091 ## 385 4.1 male 4.333 4.241043 0.03232826 -0.141043017 0.003739091 ## 386 3.7 male 4.333 4.241043 0.03232826 -0.541043017 0.003739091 ## 387 4.3 male 3.000 4.142194 0.03808791 0.157806096 0.005190100 ## 388 3.5 male 3.000 4.142194 0.03808791 -0.642193904 0.005190100 ## 389 3.7 male 3.000 4.142194 0.03808791 -0.442193904 0.005190100 ## 390 4.0 female 6.333 4.216964 0.04662245 -0.216964213 0.007776633 ## 391 4.0 female 6.333 4.216964 0.04662245 -0.216964213 0.007776633 ## 392 3.1 female 6.333 4.216964 0.04662245 -1.116964213 0.007776633 ## 393 4.5 female 6.333 4.216964 0.04662245 0.283035787 0.007776633 ## 394 4.8 male 3.333 4.166888 0.03551641 0.633112356 0.004512941 ## 395 4.2 male 3.333 4.166888 0.03551641 0.033112356 0.004512941 ## 396 4.9 male 3.333 4.166888 0.03551641 0.733112356 0.004512941 ## 397 4.8 male 3.333 4.166888 0.03551641 0.633112356 0.004512941 ## 398 3.5 male 2.833 4.129810 0.03959375 -0.629809957 0.005608603 ## 399 3.6 male 2.833 4.129810 0.03959375 -0.529809957 0.005608603 ## 400 4.4 male 2.833 4.129810 0.03959375 0.270190043 0.005608603 ## 401 3.4 male 2.833 4.129810 0.03959375 -0.729809957 0.005608603 ## 402 3.9 male 2.833 4.129810 0.03959375 -0.229809957 0.005608603 ## 403 3.8 male 2.833 4.129810 0.03959375 -0.329809957 0.005608603 
## 404 4.8 male 2.833 4.129810 0.03959375 0.670190043 0.005608603 ## 405 4.6 male 2.833 4.129810 0.03959375 0.470190043 0.005608603 ## 406 5.0 male 2.833 4.129810 0.03959375 0.870190043 0.005608603 ## 407 3.8 male 2.833 4.129810 0.03959375 -0.329809957 0.005608603 ## 408 4.2 male 2.833 4.129810 0.03959375 0.070190043 0.005608603 ## 409 3.3 female 6.667 4.241732 0.04998498 -0.941732107 0.008938826 ## 410 4.7 female 6.667 4.241732 0.04998498 0.458267893 0.008938826 ## 411 4.6 female 6.667 4.241732 0.04998498 0.358267893 0.008938826 ## 412 4.6 female 6.667 4.241732 0.04998498 0.358267893 0.008938826 ## 413 4.0 female 6.667 4.241732 0.04998498 -0.241732107 0.008938826 ## 414 4.2 female 6.833 4.254042 0.05178679 -0.054041899 0.009594877 ## 415 4.9 female 6.833 4.254042 0.05178679 0.645958101 0.009594877 ## 416 4.5 female 6.833 4.254042 0.05178679 0.245958101 0.009594877 ## 417 4.8 female 6.833 4.254042 0.05178679 0.545958101 0.009594877 ## 418 3.8 female 6.833 4.254042 0.05178679 -0.454041899 0.009594877 ## 419 4.8 male 7.833 4.500587 0.06669455 0.299413178 0.015914101 ## 420 5.0 male 7.833 4.500587 0.06669455 0.499413178 0.015914101 ## 421 5.0 male 7.833 4.500587 0.06669455 0.499413178 0.015914101 ## 422 4.9 male 7.833 4.500587 0.06669455 0.399413178 0.015914101 ## 423 4.6 male 7.833 4.500587 0.06669455 0.099413178 0.015914101 ## 424 5.0 male 7.833 4.500587 0.06669455 0.499413178 0.015914101 ## 425 4.8 male 7.833 4.500587 0.06669455 0.299413178 0.015914101 ## 426 4.9 male 7.833 4.500587 0.06669455 0.399413178 0.015914101 ## 427 4.9 male 7.833 4.500587 0.06669455 0.399413178 0.015914101 ## 428 3.9 male 7.833 4.500587 0.06669455 -0.600586822 0.015914101 ## 429 3.9 male 7.833 4.500587 0.06669455 -0.600586822 0.015914101 ## 430 4.5 male 5.833 4.352276 0.04136624 0.147723924 0.006122003 ## 431 4.5 male 5.833 4.352276 0.04136624 0.147723924 0.006122003 ## 432 3.3 male 2.000 4.068039 0.04869463 -0.768038531 0.008483274 ## 433 3.1 male 2.000 4.068039 0.04869463 -0.968038531 
0.008483274 ## 434 2.8 male 2.000 4.068039 0.04869463 -1.268038531 0.008483274 ## 435 3.1 male 2.000 4.068039 0.04869463 -0.968038531 0.008483274 ## 436 4.2 male 2.000 4.068039 0.04869463 0.131961469 0.008483274 ## 437 3.4 male 2.000 4.068039 0.04869463 -0.668038531 0.008483274 ## 438 3.0 male 2.000 4.068039 0.04869463 -1.068038531 0.008483274 ## 439 3.3 female 7.833 4.328197 0.06398835 -1.028197272 0.014648840 ## 440 3.6 female 7.833 4.328197 0.06398835 -0.728197272 0.014648840 ## 441 3.7 female 7.833 4.328197 0.06398835 -0.628197272 0.014648840 ## 442 3.6 male 3.333 4.166888 0.03551641 -0.566887644 0.004512941 ## 443 4.3 male 3.333 4.166888 0.03551641 0.133112356 0.004512941 ## 444 4.1 female 4.500 4.081037 0.03794809 0.018962586 0.005152064 ## 445 4.9 female 4.500 4.081037 0.03794809 0.818962586 0.005152064 ## 446 4.8 female 4.500 4.081037 0.03794809 0.718962586 0.005152064 ## 447 3.7 female 4.333 4.068653 0.03822881 -0.368653467 0.005228571 ## 448 3.9 female 4.333 4.068653 0.03822881 -0.168653467 0.005228571 ## 449 4.5 female 4.333 4.068653 0.03822881 0.431346533 0.005228571 ## 450 3.6 female 4.333 4.068653 0.03822881 -0.468653467 0.005228571 ## 451 4.4 female 4.333 4.068653 0.03822881 0.331346533 0.005228571 ## 452 3.4 female 4.333 4.068653 0.03822881 -0.668653467 0.005228571 ## 453 4.4 female 4.333 4.068653 0.03822881 0.331346533 0.005228571 ## 454 4.5 male 6.833 4.426431 0.05306158 0.073568551 0.010073069 ## 455 4.5 male 6.833 4.426431 0.05306158 0.073568551 0.010073069 ## 456 4.5 male 6.833 4.426431 0.05306158 0.073568551 0.010073069 ## 457 4.6 male 6.833 4.426431 0.05306158 0.173568551 0.010073069 ## 458 4.1 male 6.833 4.426431 0.05306158 -0.326431449 0.010073069 ## 459 4.5 male 6.833 4.426431 0.05306158 0.073568551 0.010073069 ## 460 3.5 female 5.333 4.142809 0.03941338 -0.642808840 0.005557619 ## 461 4.4 female 5.333 4.142809 0.03941338 0.257191160 0.005557619 ## 462 4.4 female 5.333 4.142809 0.03941338 0.257191160 0.005557619 ## 463 4.1 female 5.333 
4.142809 0.03941338 -0.042808840 0.005557619 ## .sigma .cooksd .std.resid ## 1 0.5285624 2.137443e-03 1.10351503 ## 2 0.5292627 2.071580e-06 -0.03435436 ## 3 0.5291649 3.003252e-04 -0.41364416 ## 4 0.5283005 2.935232e-03 1.29315993 ## 5 0.5288296 1.310807e-03 0.86818494 ## 6 0.5292119 1.557483e-04 0.29926398 ## 7 0.5255230 1.126695e-02 -2.54534079 ## 8 0.5292541 2.429743e-05 -0.12680279 ## 9 0.5280460 3.193979e-03 -1.45383345 ## 10 0.5287072 2.346156e-03 0.98298885 ## 11 0.5291946 2.904385e-04 -0.34585752 ## 12 0.5287072 2.346156e-03 0.98298885 ## 13 0.5284715 3.339838e-03 1.17282404 ## 14 0.5292494 5.910622e-05 -0.15602232 ## 15 0.5292494 5.910622e-05 -0.15602232 ## 16 0.5290539 8.837978e-04 0.60331846 ## 17 0.5287072 2.346156e-03 0.98298885 ## 18 0.5287237 3.759402e-03 0.96830651 ## 19 0.5290646 1.385058e-03 0.58774303 ## 20 0.5290646 1.385058e-03 0.58774303 ## 21 0.5284906 5.382095e-03 1.15858824 ## 22 0.5290646 1.385058e-03 0.58774303 ## 23 0.5291725 6.334069e-04 0.39746129 ## 24 0.5292525 3.302708e-05 0.13733555 ## 25 0.5291098 4.673601e-04 0.51662298 ## 26 0.5289763 8.734572e-04 0.70626670 ## 27 0.5292019 1.872167e-04 0.32697927 ## 28 0.5288014 1.405508e-03 0.89591042 ## 29 0.5285850 2.063513e-03 1.08555414 ## 30 0.5292019 1.872167e-04 0.32697927 ## 31 0.5290189 7.626131e-04 0.65176558 ## 32 0.5291405 3.833637e-04 0.46210936 ## 33 0.5292594 1.230694e-05 0.08279693 ## 34 0.5292207 1.332616e-04 0.27245315 ## 35 0.5286225 1.998674e-03 -1.05514035 ## 36 0.5284044 2.769562e-03 -1.22142116 ## 37 0.5288328 1.388993e-03 0.86498769 ## 38 0.5290010 8.466266e-04 0.67531416 ## 39 0.5290010 8.466266e-04 0.67531416 ## 40 0.5243065 1.592083e-02 -2.92848294 ## 41 0.5291277 4.378348e-04 0.48564063 ## 42 0.5288328 1.388993e-03 0.86498769 ## 43 0.5282295 3.084876e-03 1.33991634 ## 44 0.5282295 3.084876e-03 1.33991634 ## 45 0.5290689 5.807650e-04 0.58137864 ## 46 0.5285016 2.273479e-03 1.15028191 ## 47 0.5290689 5.807650e-04 0.58137864 ## 48 0.5285016 2.273479e-03 1.15028191 ## 
49 0.5289213 1.021423e-03 0.77101306 ## 50 0.5290413 6.758060e-04 -0.62123932 ## 51 0.5292618 4.791188e-06 -0.05230817 ## 52 0.5292525 3.302708e-05 0.13733555 ## 53 0.5292019 1.872167e-04 0.32697927 ## 54 0.5283271 2.847471e-03 1.27519786 ## 55 0.5285850 2.063513e-03 1.08555414 ## 56 0.5291098 4.673601e-04 0.51662298 ## 57 0.5283271 2.847471e-03 1.27519786 ## 58 0.5289763 8.734572e-04 0.70626670 ## 59 0.5283271 2.847471e-03 1.27519786 ## 60 0.5283122 2.245181e-03 -1.28526102 ## 61 0.5285722 1.631828e-03 -1.09572824 ## 62 0.5292624 2.337244e-06 0.04146844 ## 63 0.5292223 8.937237e-05 -0.26727957 ## 64 0.5292599 7.567956e-06 -0.07777741 ## 65 0.5288280 9.463331e-04 0.86973343 ## 66 0.5288280 9.463331e-04 0.86973343 ## 67 0.5281277 2.467101e-03 -1.40429257 ## 68 0.5291978 1.549052e-04 -0.33759712 ## 69 0.5292508 2.979680e-05 -0.14806434 ## 70 0.5291035 3.776626e-04 -0.52712990 ## 71 0.5291035 3.776626e-04 -0.52712990 ## 72 0.5289678 6.980688e-04 -0.71666268 ## 73 0.5292327 7.252658e-05 0.23100122 ## 74 0.5287908 1.116124e-03 -0.90619546 ## 75 0.5282021 2.335115e-03 -1.35752997 ## 76 0.5292628 1.216342e-06 -0.03098298 ## 77 0.5282021 2.335115e-03 -1.35752997 ## 78 0.5284779 1.728671e-03 -1.16802326 ## 79 0.5259917 1.101880e-02 -2.38107770 ## 80 0.5277472 5.114951e-03 -1.62228504 ## 81 0.5277472 5.114951e-03 -1.62228504 ## 82 0.5273712 6.381099e-03 -1.81198320 ## 83 0.5289785 6.188637e-04 0.70355775 ## 84 0.5292617 3.706735e-06 -0.05445002 ## 85 0.5292529 2.280327e-05 0.13505192 ## 86 0.5292028 1.316949e-04 0.32455386 ## 87 0.5292291 7.440535e-05 -0.24395196 ## 88 0.5289785 6.188637e-04 0.70355775 ## 89 0.5288095 2.530195e-03 0.88807906 ## 90 0.5276981 8.716136e-03 1.64830140 ## 91 0.5289830 1.563115e-03 0.69802348 ## 92 0.5290333 1.282884e-03 -0.63236562 ## 93 0.5289830 1.563115e-03 0.69802348 ## 94 0.5291433 2.610293e-04 -0.45678174 ## 95 0.5292599 7.567956e-06 -0.07777741 ## 96 0.5292223 8.937237e-05 -0.26727957 ## 97 0.5292223 8.937237e-05 -0.26727957 ## 98 
0.5292112 1.135166e-04 0.30122693 ## 99 0.5292562 1.561603e-05 0.11172476 ## 100 0.5292112 1.135166e-04 0.30122693 ## 101 0.5292112 1.135166e-04 0.30122693 ## 102 0.5278315 4.354926e-03 1.57659870 ## 103 0.5274657 5.465617e-03 1.76624268 ## 104 0.5290362 6.918014e-04 0.62837877 ## 105 0.5281556 3.370257e-03 1.38695471 ## 106 0.5278315 4.354926e-03 1.57659870 ## 107 0.5291526 3.372427e-04 0.43873479 ## 108 0.5274657 5.465617e-03 1.76624268 ## 109 0.5284380 2.511610e-03 1.19731073 ## 110 0.5288783 1.172382e-03 0.81802276 ## 111 0.5285939 2.037567e-03 -1.07841710 ## 112 0.5292045 1.792285e-04 -0.31984116 ## 113 0.5292536 2.969898e-05 -0.13019717 ## 114 0.5292536 2.969898e-05 -0.13019717 ## 115 0.5289821 8.563538e-04 -0.69912913 ## 116 0.5283375 2.817207e-03 -1.26806108 ## 117 0.5280395 3.722869e-03 -1.45770507 ## 118 0.5291140 4.547801e-04 -0.50948514 ## 119 0.5292045 1.792285e-04 -0.31984116 ## 120 0.5283375 2.817207e-03 -1.26806108 ## 121 0.5289227 1.022897e-03 -0.76941699 ## 122 0.5292633 2.040983e-07 -0.01086840 ## 123 0.5289227 1.022897e-03 -0.76941699 ## 124 0.5285038 2.279897e-03 -1.14869129 ## 125 0.5285038 2.279897e-03 -1.14869129 ## 126 0.5290842 5.380764e-04 0.55804305 ## 127 0.5286181 3.119091e-03 -1.05873865 ## 128 0.5292119 1.557483e-04 0.29926398 ## 129 0.5288587 1.222929e-03 -0.83857793 ## 130 0.5286192 1.945997e-03 1.05782526 ## 131 0.5291420 3.668615e-04 -0.45929729 ## 132 0.5286548 1.838590e-03 -1.02821825 ## 133 0.5291113 3.303818e-04 0.51405581 ## 134 0.5291113 3.303818e-04 0.51405581 ## 135 0.5285888 1.465213e-03 1.08256163 ## 136 0.5285888 1.465213e-03 1.08256163 ## 137 0.5288044 9.971408e-04 0.89305969 ## 138 0.5291113 3.303818e-04 0.51405581 ## 139 0.5292529 2.280327e-05 0.13505192 ## 140 0.5287982 4.005182e-03 0.89901235 ## 141 0.5291546 9.369626e-04 -0.43482615 ## 142 0.5291840 1.796615e-04 0.37151804 ## 143 0.5292443 4.311669e-05 0.18200154 ## 144 0.5285091 1.705349e-03 -1.14461395 ## 145 0.5290823 4.097080e-04 0.56103454 ## 146 0.5292443 
4.311669e-05 0.18200154 ## 147 0.5292327 7.252658e-05 0.23100122 ## 148 0.5288954 8.689880e-04 0.79959956 ## 149 0.5287002 1.329773e-03 0.98913234 ## 150 0.5280106 2.956183e-03 -1.47479381 ## 151 0.5287908 1.116124e-03 -0.90619546 ## 152 0.5283122 2.245181e-03 -1.28526102 ## 153 0.5292508 2.979680e-05 -0.14806434 ## 154 0.5286548 1.838590e-03 -1.02821825 ## 155 0.5291259 4.156828e-04 0.48890430 ## 156 0.5288587 1.222929e-03 -0.83857793 ## 157 0.5292119 1.557483e-04 0.29926398 ## 158 0.5288296 1.310807e-03 0.86818494 ## 159 0.5288296 1.310807e-03 0.86818494 ## 160 0.5292597 1.113461e-05 -0.08001665 ## 161 0.5286548 1.838590e-03 -1.02821825 ## 162 0.5222837 2.169940e-02 -3.47168193 ## 163 0.5292562 1.561603e-05 0.11172476 ## 164 0.5292112 1.135166e-04 0.30122693 ## 165 0.5284137 1.846182e-03 -1.21479041 ## 166 0.5292112 1.135166e-04 0.30122693 ## 167 0.5290230 5.225386e-04 -0.64628391 ## 168 0.5288614 8.739005e-04 -0.83578607 ## 169 0.5278000 3.177873e-03 -1.59379474 ## 170 0.5279939 4.491802e-03 1.48459698 ## 171 0.5292627 2.246655e-06 -0.03320217 ## 172 0.5275173 6.175390e-03 -1.74072622 ## 173 0.5292297 1.025091e-04 -0.24195189 ## 174 0.5288850 1.151385e-03 -0.81088304 ## 175 0.5285850 2.063513e-03 1.08555414 ## 176 0.5289763 8.734572e-04 0.70626670 ## 177 0.5292525 3.302708e-05 0.13733555 ## 178 0.5292277 1.087061e-04 0.24909080 ## 179 0.5292536 2.969898e-05 -0.13019717 ## 180 0.5290362 6.918014e-04 0.62837877 ## 181 0.5292045 1.792285e-04 -0.31984116 ## 182 0.5290362 6.918014e-04 0.62837877 ## 183 0.5268952 7.195987e-03 -2.02663702 ## 184 0.5285939 2.037567e-03 -1.07841710 ## 185 0.5259228 1.014151e-02 -2.40592499 ## 186 0.5286789 1.778985e-03 1.00766674 ## 187 0.5291526 3.372427e-04 0.43873479 ## 188 0.5283375 2.817207e-03 -1.26806108 ## 189 0.5268952 7.195987e-03 -2.02663702 ## 190 0.5292277 1.087061e-04 0.24909080 ## 191 0.5291743 3.730164e-04 0.39345625 ## 192 0.5292633 4.586295e-07 0.01379633 ## 193 0.5287297 2.234287e-03 0.96294613 ## 194 0.5290382 
1.095908e-03 -0.62556534 ## 195 0.5281582 5.374444e-03 -1.38532710 ## 196 0.5291542 5.314400e-04 -0.43562490 ## 197 0.5291542 5.314400e-04 -0.43562490 ## 198 0.5291864 3.225324e-04 -0.36586359 ## 199 0.5279590 5.456543e-03 -1.50484335 ## 200 0.5292456 7.466659e-05 -0.17603363 ## 201 0.5290857 7.440562e-04 -0.55569355 ## 202 0.5292395 9.990856e-05 0.20362629 ## 203 0.5292456 7.466659e-05 -0.17603363 ## 204 0.5290857 7.440562e-04 -0.55569355 ## 205 0.5279590 5.456543e-03 -1.50484335 ## 206 0.5292597 1.113461e-05 -0.08001665 ## 207 0.5286192 1.945997e-03 1.05782526 ## 208 0.5291259 4.156828e-04 0.48890430 ## 209 0.5284980 1.799448e-03 1.15300255 ## 210 0.5284980 1.799448e-03 1.15300255 ## 211 0.5289187 8.107622e-04 0.77394015 ## 212 0.5289187 8.107622e-04 0.77394015 ## 213 0.5284980 1.799448e-03 1.15300255 ## 214 0.5291737 2.110591e-04 0.39487775 ## 215 0.5287291 1.256482e-03 0.96347135 ## 216 0.5287291 1.256482e-03 0.96347135 ## 217 0.5268530 1.015025e-02 -2.04460846 ## 218 0.5291937 1.534772e-04 0.34803045 ## 219 0.5292489 3.184183e-05 0.15852373 ## 220 0.5282969 2.126802e-03 1.29556401 ## 221 0.5291937 1.534772e-04 0.34803045 ## 222 0.5287799 1.064443e-03 0.91655059 ## 223 0.5292624 2.337244e-06 0.04146844 ## 224 0.5287002 1.329773e-03 0.98913234 ## 225 0.5291616 2.403648e-04 0.42053400 ## 226 0.5288954 8.689880e-04 0.79959956 ## 227 0.5269374 2.291960e-02 -2.00855856 ## 228 0.5290112 2.489575e-03 0.66197801 ## 229 0.5291356 1.261524e-03 0.47122540 ## 230 0.5280753 1.172003e-02 -1.43630072 ## 231 0.5289282 1.673629e-03 -0.76314553 ## 232 0.5290743 9.441332e-04 -0.57318439 ## 233 0.5289282 1.673629e-03 -0.76314553 ## 234 0.5291616 2.403648e-04 0.42053400 ## 235 0.5290492 5.058519e-04 0.61006678 ## 236 0.5287002 1.329773e-03 0.98913234 ## 237 0.5290492 5.058519e-04 0.61006678 ## 238 0.5288206 2.830546e-03 0.87712032 ## 239 0.5255203 2.385404e-02 -2.54627361 ## 240 0.5281263 7.264279e-03 -1.40514230 ## 241 0.5289431 9.563845e-04 -0.74606234 ## 242 0.5292275 
1.205719e-04 -0.24959384 ## 243 0.5292275 1.205719e-04 -0.24959384 ## 244 0.5278299 4.816127e-03 -1.57746500 ## 245 0.5289014 1.527476e-03 0.79315365 ## 246 0.5291650 4.151218e-04 0.41348326 ## 247 0.5281941 4.508522e-03 1.36265924 ## 248 0.5292494 5.910622e-05 -0.15602232 ## 249 0.5289605 1.278109e-03 -0.72552791 ## 250 0.5285602 2.965788e-03 -1.10519830 ## 251 0.5289014 1.527476e-03 0.79315365 ## 252 0.5291388 3.498993e-04 0.46533380 ## 253 0.5292572 1.730408e-05 -0.10348259 ## 254 0.5286269 1.786655e-03 -1.05150990 ## 255 0.5283765 2.489076e-03 -1.24111536 ## 256 0.5292572 1.730408e-05 -0.10348259 ## 257 0.5290033 7.303631e-04 -0.67229898 ## 258 0.5292140 1.388066e-04 -0.29308805 ## 259 0.5284011 2.419933e-03 1.22375565 ## 260 0.5292591 1.198536e-05 0.08612287 ## 261 0.5288529 1.152549e-03 0.84454472 ## 262 0.5292196 1.228504e-04 0.27572834 ## 263 0.5284011 2.419933e-03 1.22375565 ## 264 0.5290033 7.303631e-04 -0.67229898 ## 265 0.5290511 7.960903e-04 0.60744315 ## 266 0.5281881 4.028620e-03 1.36647792 ## 267 0.5281881 4.028620e-03 1.36647792 ## 268 0.5284662 2.987423e-03 1.17671923 ## 269 0.5287027 2.101603e-03 0.98696053 ## 270 0.5288976 1.371159e-03 0.79720184 ## 271 0.5292601 1.088335e-05 -0.07580968 ## 272 0.5292559 2.455644e-05 0.11387440 ## 273 0.5292104 1.745010e-04 0.30355848 ## 274 0.5292228 1.334817e-04 -0.26549375 ## 275 0.5285871 3.290078e-03 1.08389861 ## 276 0.5289782 1.388021e-03 0.70401773 ## 277 0.5283291 4.544206e-03 1.27383905 ## 278 0.5285871 3.290078e-03 1.08389861 ## 279 0.5285871 3.290078e-03 1.08389861 ## 280 0.5289782 1.388021e-03 0.70401773 ## 281 0.5288034 2.238016e-03 0.89395817 ## 282 0.5291539 8.736402e-04 0.43620609 ## 283 0.5292529 8.384186e-05 -0.13513127 ## 284 0.5292286 2.773145e-04 0.24576030 ## 285 0.5291539 8.736402e-04 0.43620609 ## 286 0.5292286 2.773145e-04 0.24576030 ## 287 0.5291539 8.736402e-04 0.43620609 ## 288 0.5275245 9.428393e-03 -1.73712333 ## 289 0.5290584 1.113334e-03 -0.59693155 ## 290 0.5278846 7.478393e-03 
-1.54709137 ## 291 0.5291875 1.786006e-04 -0.36324704 ## 292 0.5290876 4.136001e-04 -0.55277824 ## 293 0.5289463 7.458452e-04 -0.74230943 ## 294 0.5291840 1.796615e-04 0.37151804 ## 295 0.5287547 1.150306e-03 0.94006754 ## 296 0.5289238 1.442023e-03 -0.76819428 ## 297 0.5292407 9.645367e-05 -0.19867552 ## 298 0.5292403 9.135983e-05 0.20023518 ## 299 0.5282309 4.085367e-03 1.33899287 ## 300 0.5290699 7.660595e-04 0.57982107 ## 301 0.5289225 1.349648e-03 0.76961402 ## 302 0.5287336 2.097395e-03 0.95940697 ## 303 0.5276027 6.567401e-03 -1.69769430 ## 304 0.5290836 7.118683e-04 -0.55893661 ## 305 0.5287336 2.097395e-03 0.95940697 ## 306 0.5272099 8.117879e-03 -1.88748725 ## 307 0.5285031 3.009302e-03 1.14919992 ## 308 0.5287636 1.175336e-03 -0.93184063 ## 309 0.5285395 1.702072e-03 -1.12137183 ## 310 0.5279130 5.031245e-03 -1.53110299 ## 311 0.5282273 3.861440e-03 -1.34134750 ## 312 0.5267194 9.467996e-03 -2.10036947 ## 313 0.5292493 4.992962e-05 0.15652272 ## 314 0.5292104 1.745010e-04 0.30355848 ## 315 0.5289444 1.270234e-03 -0.74451606 ## 316 0.5287610 2.000415e-03 -0.93431267 ## 317 0.5286815 2.831044e-03 -1.00544622 ## 318 0.5291542 5.314400e-04 -0.43562490 ## 319 0.5286815 2.831044e-03 -1.00544622 ## 320 0.5285642 4.188699e-03 1.10206485 ## 321 0.5292333 1.805411e-04 -0.22879982 ## 322 0.5292625 5.158870e-06 -0.03867630 ## 323 0.5283019 5.758594e-03 1.29218838 ## 324 0.5285642 4.188699e-03 1.10206485 ## 325 0.5291007 9.749644e-04 0.53169428 ## 326 0.5292502 7.910200e-05 0.15144723 ## 327 0.5290857 7.440562e-04 -0.55569355 ## 328 0.5285345 3.050575e-03 -1.12518343 ## 329 0.5252269 1.684224e-02 -2.64382312 ## 330 0.5292456 7.466659e-05 -0.17603363 ## 331 0.5287297 2.234287e-03 0.96294613 ## 332 0.5291864 3.225324e-04 -0.36586359 ## 333 0.5292584 3.435915e-05 0.09285536 ## 334 0.5280868 8.141444e-03 -1.42934322 ## 335 0.5236188 3.285786e-02 -3.12400472 ## 336 0.5274101 1.082736e-02 -1.79330205 ## 337 0.5242881 2.898063e-02 -2.93390434 ## 338 0.5266121 8.276546e-03 
-2.14407821 ## 339 0.5290507 5.261084e-04 0.60794388 ## 340 0.5284664 1.970623e-03 1.17659685 ## 341 0.5281886 2.656707e-03 1.36614784 ## 342 0.5290507 5.261084e-04 0.60794388 ## 343 0.5288974 9.053240e-04 0.79749487 ## 344 0.5290507 5.261084e-04 0.60794388 ## 345 0.5281886 2.656707e-03 1.36614784 ## 346 0.5291627 2.491821e-04 0.41839289 ## 347 0.5288974 9.053240e-04 0.79749487 ## 348 0.5288754 1.018755e-03 0.82107625 ## 349 0.5278264 3.769430e-03 1.57937948 ## 350 0.5281510 2.918836e-03 1.38980367 ## 351 0.5288754 1.018755e-03 0.82107625 ## 352 0.5284340 2.176858e-03 1.20022786 ## 353 0.5281510 2.918836e-03 1.38980367 ## 354 0.5281510 2.918836e-03 1.38980367 ## 355 0.5281510 2.918836e-03 1.38980367 ## 356 0.5278264 3.769430e-03 1.57937948 ## 357 0.5290339 6.026291e-04 0.63150044 ## 358 0.5277570 5.368689e-03 -1.61701662 ## 359 0.5286314 2.254343e-03 -1.04782901 ## 360 0.5288396 1.511871e-03 -0.85809980 ## 361 0.5288396 1.511871e-03 -0.85809980 ## 362 0.5292154 1.713843e-04 -0.28891219 ## 363 0.5291316 4.703918e-04 -0.47864139 ## 364 0.5288924 1.565310e-03 0.80291938 ## 365 0.5288924 1.565310e-03 0.80291938 ## 366 0.5288924 1.565310e-03 0.80291938 ## 367 0.5288924 1.565310e-03 0.80291938 ## 368 0.5286961 2.392986e-03 0.99275457 ## 369 0.5292611 5.954547e-06 0.06277302 ## 370 0.5290339 6.026291e-04 0.63150044 ## 371 0.5291161 3.868348e-04 -0.50595441 ## 372 0.5291510 2.951204e-04 0.44192463 ## 373 0.5292281 1.102868e-04 -0.24750146 ## 374 0.5286807 1.822544e-03 -1.00613216 ## 375 0.5290006 8.199620e-04 -0.67582793 ## 376 0.5254428 1.187942e-02 -2.57239007 ## 377 0.5285943 3.729038e-03 1.07813465 ## 378 0.5288095 2.530195e-03 0.88807906 ## 379 0.5292612 1.241125e-05 -0.06219886 ## 380 0.5290333 1.282884e-03 -0.63236562 ## 381 0.5288095 2.530195e-03 0.88807906 ## 382 0.5288095 2.530195e-03 0.88807906 ## 383 0.5288280 9.463331e-04 0.86973343 ## 384 0.5291248 3.012696e-04 0.49072909 ## 385 0.5292223 8.937237e-05 -0.26727957 ## 386 0.5286583 1.315115e-03 -1.02528824 ## 
387 0.5292119 1.557483e-04 0.29926398 ## 388 0.5284094 2.579337e-03 -1.21785856 ## 389 0.5288587 1.222929e-03 -0.83857793 ## 390 0.5291657 4.434341e-04 -0.41198756 ## 391 0.5291657 4.434341e-04 -0.41198756 ## 392 0.5266691 1.175253e-02 -2.12097358 ## 393 0.5290972 7.546327e-04 0.53744911 ## 394 0.5284340 2.176858e-03 1.20022786 ## 395 0.5292611 5.954547e-06 0.06277302 ## 396 0.5281510 2.918836e-03 1.38980367 ## 397 0.5284340 2.176858e-03 1.20022786 ## 398 0.5284417 2.683114e-03 -1.19462491 ## 399 0.5286821 1.898718e-03 -1.00494469 ## 400 0.5291123 4.938088e-04 0.51249707 ## 401 0.5281598 3.602796e-03 -1.38430514 ## 402 0.5291541 3.572382e-04 -0.43590403 ## 403 0.5290382 7.357795e-04 -0.62558425 ## 404 0.5283329 3.038198e-03 1.27121795 ## 405 0.5288056 1.495433e-03 0.89185751 ## 406 0.5276937 5.122104e-03 1.65057839 ## 407 0.5290382 7.357795e-04 -0.62558425 ## 408 0.5292532 3.332508e-05 0.13313663 ## 409 0.5274184 9.625306e-03 -1.78927820 ## 410 0.5288271 2.279285e-03 0.87070277 ## 411 0.5289968 1.393078e-03 0.68070413 ## 412 0.5289968 1.393078e-03 0.68070413 ## 413 0.5291420 6.342032e-04 -0.45928772 ## 414 0.5292573 3.406866e-05 -0.10271288 ## 415 0.5283955 4.867462e-03 1.22771803 ## 416 0.5291377 7.056935e-04 0.46747180 ## 417 0.5286436 3.477062e-03 1.03765647 ## 418 0.5288348 2.404837e-03 -0.86295911 ## 419 0.5290759 1.756864e-03 0.57089354 ## 420 0.5287415 4.887832e-03 0.95223517 ## 421 0.5287415 4.887832e-03 0.95223517 ## 422 0.5289296 3.126375e-03 0.76156436 ## 423 0.5292427 1.936797e-04 0.18955192 ## 424 0.5287415 4.887832e-03 0.95223517 ## 425 0.5290759 1.756864e-03 0.57089354 ## 426 0.5289296 3.126375e-03 0.76156436 ## 427 0.5289296 3.126375e-03 0.76156436 ## 428 0.5285084 7.068836e-03 -1.14514378 ## 429 0.5285084 7.068836e-03 -1.14514378 ## 430 0.5292182 1.612907e-04 0.28027543 ## 431 0.5292182 1.612907e-04 0.28027543 ## 432 0.5280375 6.070293e-03 -1.45892750 ## 433 0.5273146 9.643372e-03 -1.83883748 ## 434 0.5259151 1.654659e-02 -2.40870244 ## 435 
0.5273146 9.643372e-03 -1.83883748
## 436 0.5292272 1.792000e-04 0.25066739
## 437 0.5283362 4.592473e-03 -1.26897251
## 438 0.5268902 1.173863e-02 -2.02879247
## 439 0.5270505 1.902192e-02 -1.95921301
## 440 0.5281546 9.541123e-03 -1.38756794
## 441 0.5284384 7.100574e-03 -1.19701958
## 442 0.5285985 1.745270e-03 -1.07468183
## 443 0.5292267 9.622886e-05 0.25234883
## 444 0.5292626 2.232252e-06 0.03596002
## 445 0.5278740 4.163669e-03 1.55305357
## 446 0.5281929 3.208933e-03 1.36341687
## 447 0.5289821 8.563538e-04 -0.69912913
## 448 0.5292045 1.792285e-04 -0.31984116
## 449 0.5288783 1.172382e-03 0.81802276
## 450 0.5288088 1.383950e-03 -0.88877311
## 451 0.5290362 6.918014e-04 0.62837877
## 452 0.5283375 2.817207e-03 -1.26806108
## 453 0.5290362 6.918014e-04 0.62837877
## 454 0.5292521 6.634682e-05 0.13985930
## 455 0.5292521 6.634682e-05 0.13985930
## 456 0.5292521 6.634682e-05 0.13985930
## 457 0.5292007 3.692987e-04 0.32996676
## 458 0.5290418 1.306230e-03 -0.62057053
## 459 0.5292521 6.634682e-05 0.13985930
## 460 0.5284075 2.769322e-03 -1.21924997
## 461 0.5291265 4.433246e-04 0.48782825
## 462 0.5291265 4.433246e-04 0.48782825
## 463 0.5292596 1.228221e-05 -0.08119782
```

---

Join up the fitted values to plot the parallel lines model:

```r
ggplot(evals, aes(x = bty_avg, y = score, colour = gender)) +
  geom_point(alpha = 0.5) +
  theme_bw() +
  geom_line(data = augment(parallel_lines), aes(y = .fitted, colour = gender))
```

---

## Lines for each gender that aren't parallel

.midi[
Add an independent variable to the model that is the product of `male` and `bty_avg`. This is called an **interaction term**.

**Model 2:**

`$$score_i = \beta_0 + \beta_1 \, male_i + \beta_2 \, bty\_avg_i + \beta_3 \, (male \times bty\_avg)_i + \epsilon_i$$`

Model 2 for male professors (`male` = 1):

`$$score_i = \beta_0 + \beta_1 + \beta_2 \, bty\_avg_i + \beta_3 \, bty\_avg_i + \epsilon_i$$`

`$$score_i = (\beta_0 + \beta_1) + (\beta_2 + \beta_3) \, bty\_avg_i + \epsilon_i$$`

Model 2 for female professors (`male` = 0):

`$$score_i = \beta_0 + \beta_2 \, bty\_avg_i + \epsilon_i$$`
]

---

## Plot of non-parallel lines

```r
ggplot(evals, aes(x = bty_avg, y = score, colour = gender)) +
  geom_point(alpha = 0.5) +
  theme_bw() +
  geom_smooth(method = lm, fill = NA)
```

---

## Fitted lines for male and female professors

Writing `bty_avg*gender` on the right-hand side of the `lm()` formula adds the interaction term (`bty_avg:gender`) as well as both of the variables themselves (`bty_avg` and `gender`).

```r
summary(lm(score ~ bty_avg*gender, data=evals))$coefficients
```

```
##                       Estimate Std. Error   t value      Pr(>|t|)
## (Intercept)         3.95005984 0.11799986 33.475124 2.920267e-125
## bty_avg             0.03064259 0.02400361  1.276582  2.023952e-01
## gendermale         -0.18350903 0.15349459 -1.195541  2.324931e-01
## bty_avg:gendermale  0.07961855 0.03246948  2.452105  1.457376e-02
```

*What are the fitted lines for male and for female professors?*

---

## Could the difference in the slopes for male and female professors just be due to chance?

Model:

`$$score_i = \beta_0 + \beta_1 \, male_i + \beta_2 \, bty\_avg_i + \beta_3 \, (male \times bty\_avg)_i + \epsilon_i$$`

*What would be appropriate hypotheses to test?*

<br>
<br>
<br>

*What do you conclude?*

---

## Example: eBay auctions of *Mario Kart*

* Items can be sold on [ebay.com](http://www.ebay.com/) through an auction.
* The person who bids the highest price before the auction ends purchases the item.
* The `marioKart` dataset in the `openintro` package includes eBay sales of the game *Mario Kart* for Nintendo Wii in October 2009.
* Do longer auctions (`duration`, in days) result in higher prices (`totalPr`)?
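
---

## Fitted lines from the interaction model (course evaluations)

This slide returns to the `score ~ bty_avg*gender` fit. Female professors are the baseline, so the female line uses the `(Intercept)` and `bty_avg` estimates. The male line adds the `gendermale` estimate to the intercept and the `bty_avg:gendermale` estimate to the slope. The base-R sketch below does that arithmetic using estimates typed in by hand from the coefficient table; the names `b0` to `b3` and `fitted_lines` are illustrative only. It also recomputes the two-sided p-value for the interaction term from its t value. This assumes 463 - 4 = 459 residual degrees of freedom (463 courses, 4 coefficients).

```r
# Estimates typed in from summary(lm(score ~ bty_avg*gender, data = evals))
b0 <- 3.95005984   # (Intercept): intercept for female professors
b2 <- 0.03064259   # bty_avg: slope for female professors
b1 <- -0.18350903  # gendermale: change in intercept for male professors
b3 <- 0.07961855   # bty_avg:gendermale: change in slope for male professors

# Each row is a fitted line: predicted score = intercept + slope * bty_avg
fitted_lines <- rbind(
  female = c(intercept = b0,      slope = b2),
  male   = c(intercept = b0 + b1, slope = b2 + b3)
)
round(fitted_lines, 4)

# H0: beta_3 = 0 (equal slopes) against a two-sided alternative
se_b3  <- 0.03246948                          # Std. Error of bty_avg:gendermale
t_stat <- b3 / se_b3                          # estimate / standard error
p_val  <- 2 * pt(-abs(t_stat), df = 463 - 4)  # two-sided p-value from the t distribution
p_val
```

The recomputed `p_val` should agree with the `Pr(>|t|)` entry for `bty_avg:gendermale` in the table.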

---

```r
library(openintro)
glimpse(marioKart)
```

```
## Observations: 143
## Variables: 12
## $ ID         <dbl> 150377422259, 260483376854, 320432342985, 280405224...
## $ duration   <int> 3, 7, 3, 3, 1, 3, 1, 1, 3, 7, 1, 1, 1, 1, 7, 7, 3, ...
## $ nBids      <int> 20, 13, 16, 18, 20, 19, 13, 15, 29, 8, 15, 15, 13, ...
## $ cond       <fct> new, used, new, new, new, new, used, new, used, use...
## $ startPr    <dbl> 0.99, 0.99, 0.99, 0.99, 0.01, 0.99, 0.01, 1.00, 0.9...
## $ shipPr     <dbl> 4.00, 3.99, 3.50, 0.00, 0.00, 4.00, 0.00, 2.99, 4.0...
## $ totalPr    <dbl> 51.55, 37.04, 45.50, 44.00, 71.00, 45.00, 37.02, 53...
## $ shipSp     <fct> standard, firstClass, firstClass, standard, media, ...
## $ sellerRate <int> 1580, 365, 998, 7, 820, 270144, 7284, 4858, 27, 201...
## $ stockPhoto <fct> yes, yes, no, yes, yes, yes, yes, yes, yes, no, yes...
## $ wheels     <int> 1, 1, 1, 1, 2, 0, 0, 2, 1, 1, 2, 2, 2, 2, 1, 0, 1, ...
## $ title      <fct> ~~ Wii MARIO KART & WHEEL ~ NINTENDO Wii ~ BRAN...
```

---

```r
ggplot(marioKart, aes(x=duration, y=totalPr)) +
  geom_point() +
  theme_bw()
```

---

## What should we do with the two outlying values of `totalPr`?

* Remove outliers only if there is a good reason.
* In these two auctions, and only these two auctions, the game was sold with other items.

```r
# create a data set without the outliers
marioKart2 <- marioKart %>% filter(totalPr < 100)
```

---

```r
ggplot(marioKart2, aes(x=duration, y=totalPr)) +
  geom_point() +
  theme_bw()
```

---

```r
ggplot(marioKart2, aes(x = duration, y = totalPr)) +
  geom_point() +
  theme_bw() +
  geom_smooth(method = "lm")
```

There appears to be a negative relationship between `totalPr` and `duration`. That is, the longer an item is on auction, the lower the price.

*Does this make sense?*

---

Maybe there actually isn't a relationship. We can investigate whether the data are consistent with a slope of 0.

```r
summary(lm(totalPr ~ duration, data=marioKart2))$coefficients
```

```
##              Estimate Std. Error   t value     Pr(>|t|)
## (Intercept) 52.373584  1.2607560 41.541411 3.010309e-80
## duration    -1.317156  0.2769021 -4.756756 4.866701e-06
```

We have strong evidence that the slope is not 0. There must be something else affecting the relationship ...

---

Consider the role of `cond`. `cond` is a categorical variable for the game's condition, either `new` or `used`.

```r
ggplot(marioKart2, aes(x=duration, y=totalPr, color=cond)) +
  geom_point() +
  theme_bw()
```

New games, which are more desirable, were mostly sold in one-day auctions.

---

```r
ggplot(marioKart2, aes(x=duration, y=totalPr, color=cond)) +
  geom_point() +
  geom_smooth(method="lm", fill=NA) +
  theme_bw()
```

- Considering `cond` changes the nature of the relationship between `totalPr` and `duration`.
- This is an example of **Simpson's Paradox**, in which the nature of a relationship that we see in all observations changes when we look at subgroups.

---

## The fitted lines

.small[
```r
summary(lm(totalPr ~ duration, data = marioKart2))$coefficients
```

```
##              Estimate Std. Error   t value     Pr(>|t|)
## (Intercept) 52.373584  1.2607560 41.541411 3.010309e-80
## duration    -1.317156  0.2769021 -4.756756 4.866701e-06
```

```r
marioKart2_used <- marioKart2 %>% filter(cond == "used")
summary(lm(totalPr ~ duration, data = marioKart2_used))$coefficients
```

```
##               Estimate Std. Error   t value     Pr(>|t|)
## (Intercept) 41.1463022  1.7924487 22.955358 5.976630e-37
## duration     0.3589676  0.3329894  1.078015 2.842669e-01
```

```r
marioKart2_new <- marioKart2 %>% filter(cond == "new")
summary(lm(totalPr ~ duration, data = marioKart2_new))$coefficients
```

```
##              Estimate Std. Error   t value     Pr(>|t|)
## (Intercept) 58.268226  1.2497467 46.624029 4.353419e-47
## duration    -1.965595  0.4104444 -4.788944 1.233340e-05
```

```r
summary(lm(totalPr ~ duration*cond, data = marioKart2))$coefficients
```

```
##                     Estimate Std. Error   t value     Pr(>|t|)
## (Intercept)        58.268226  1.3664729 42.641332 5.832075e-81
## duration           -1.965595  0.4487799 -4.379865 2.341705e-05
## condused          -17.121924  2.1782581 -7.860374 1.013608e-12
## duration:condused   2.324563  0.5483731  4.239016 4.101561e-05
```
]

---

# An example of a variable affecting a relationship between two variables in a non-regression setting: <br> Data in two-way tables

---

## A Classic Example: Treatment for kidney stones

Source of data: *British Medical Journal (Clinical Research Edition)* March 29, 1986

- Observations are patients being treated for kidney stones.
- `treatment` is one of 2 treatments (`A` or `B`).
- `outcome` is `success` or `failure` of the treatment.

```r
kidney_stones %>% count(treatment, outcome)
```

```
## # A tibble: 4 x 3
##   treatment outcome     n
##   <chr>     <chr>   <int>
## 1 A         failure    77
## 2 A         success   273
## 3 B         failure    61
## 4 B         success   289
```

*What would make it easier to decide which treatment is better?*

---

## Describing Two-Way Tables

.small[
- The (2x2) *contingency table* below shows counts of patients being treated for kidney stones.

```r
tab <- table(kidney_stones$outcome, kidney_stones$treatment, deparse.level = 2)
addmargins(tab)
```

```
##                       kidney_stones$treatment
## kidney_stones$outcome   A   B Sum
##               failure  77  61 138
##               success 273 289 562
##               Sum     350 350 700
```

- Proportion of observations in each cell of the contingency table.

```r
prop.table(tab)
```

```
##                       kidney_stones$treatment
## kidney_stones$outcome          A          B
##               failure 0.11000000 0.08714286
##               success 0.39000000 0.41285714
```

- **Joint, marginal, and conditional distributions**.
```r
addmargins(prop.table(tab))
```

```
##                      kidney_stones$treatment
## kidney_stones$outcome          A          B        Sum
##               failure 0.11000000 0.08714286 0.19714286
##               success 0.39000000 0.41285714 0.80285714
##                   Sum 0.50000000 0.50000000 1.00000000
```
]

---

## Some vocabulary

*Recall:* The distribution of a variable is the pattern of values in the data for that variable, showing the frequency or relative frequency (proportion) with which each value occurs.

We can also look at the **joint distribution** of two variables. If both variables are categorical, we can see their joint distribution in a **contingency table** showing the counts of observations in each way the data can be cross-classified.

A **marginal distribution** is the distribution of only one of the variables in a contingency table.

A **conditional distribution** is the distribution of a variable within a fixed value of a second variable.

What percentage of successes were from Treatment A?

<br>
<br>

What percentage of Treatment A surgeries resulted in a success?

---

## Some additional information

- A is an invasive open surgery treatment
- B is a newer, less invasive treatment
- Doctors get to choose the treatment, depending on the patient
- What might influence how a doctor chooses a treatment for their patient?
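---

.small[
The two questions on the vocabulary slide ask for two different conditional distributions, which `prop.table()` returns through its `margin` argument. A minimal sketch, assuming we rebuild the 2x2 table by hand from the counts printed earlier so that it runs without the `kidney_stones` data frame; the object names below are illustrative only:

```r
# Outcome-by-treatment counts copied from the kidney stone table shown earlier
tab <- matrix(c(77, 273, 61, 289), nrow = 2,
              dimnames = list(outcome = c("failure", "success"),
                              treatment = c("A", "B")))

# Joint distribution: every cell divided by the grand total (700)
joint <- prop.table(tab)

# Marginal distribution of outcome: add the joint proportions across treatments
outcome_marginal <- rowSums(joint)

# margin = 1: each row sums to 1, giving treatment given outcome
# ("What percentage of successes were from Treatment A?")
treat_given_outcome <- prop.table(tab, margin = 1)

# margin = 2: each column sums to 1, giving outcome given treatment
# ("What percentage of Treatment A surgeries resulted in a success?")
outcome_given_treat <- prop.table(tab, margin = 2)

round(100 * treat_given_outcome["success", "A"], 1)  # 48.6
round(100 * outcome_given_treat["success", "A"], 1)  # 78
```

The two answers differ because they divide the same count (273) by different totals: all 562 successes versus all 350 Treatment A patients.
]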
---

## Kidney stones come in various sizes

```r
kidney_stones %>%
  count(size, treatment, outcome) %>%
  group_by(size, treatment) %>%
  mutate(per_success = n / sum(n))
```

```
## # A tibble: 8 x 5
## # Groups:   size, treatment [4]
##   size  treatment outcome     n per_success
##   <chr> <chr>     <chr>   <int>       <dbl>
## 1 large A         failure    71      0.270
## 2 large A         success   192      0.730
## 3 large B         failure    25      0.312
## 4 large B         success    55      0.688
## 5 small A         failure     6      0.0690
## 6 small A         success    81      0.931
## 7 small B         failure    36      0.133
## 8 small B         success   234      0.867
```

---

.small[
Column percentages (conditional distribution of outcome given treatment):

```r
prop.table(table(kidney_stones$outcome, kidney_stones$treatment), margin = 2)
```

```
##          
##                   A         B
##   failure 0.2200000 0.1742857
##   success 0.7800000 0.8257143
```

```r
large <- kidney_stones %>% filter(size == "large")
prop.table(table(large$outcome, large$treatment), margin = 2)
```

```
##          
##                  A        B
##   failure 0.269962 0.312500
##   success 0.730038 0.687500
```

```r
small <- kidney_stones %>% filter(size == "small")
prop.table(table(small$outcome, small$treatment), margin = 2)
```

```
##          
##                    A          B
##   failure 0.06896552 0.13333333
##   success 0.93103448 0.86666667
```
]

*Which treatment is better?*

---

This example is another case of **Simpson's paradox**. Treatment A has the higher success rate for both small stones (93.1% vs 86.7%) and large stones (73.0% vs 68.8%), yet the lower success rate overall (78.0% vs 82.6%). Doctors used A mostly on large stones (263 of 350 patients), which have lower success rates under either treatment, and B mostly on small stones (270 of 350 patients).

### Moral of the story:

Be careful drawing conclusions from data! It's important to understand how the data were collected and what other factors might have an effect.

---

.small[
Visualizing the kidney stone data: treatment and outcome

```r
ggplot(kidney_stones, aes(x = treatment, fill = outcome)) +
  geom_bar(position = "fill") +
  labs(y = "Proportion") +
  theme_bw()
```

Visualizing the kidney stone data: treatment and outcome by size

```r
ggplot(kidney_stones, aes(x = treatment, fill = outcome)) +
  geom_bar(position = "fill") +
  labs(y = "Proportion") +
  facet_grid(. ~ size) +
  theme_bw()
```
]

---

# Confounding

---

## What is a **confounding variable**?

* When examining the relationship between two variables in observational studies, it is important to consider the possible effects of other variables.

--

* A third variable is a **confounding variable** if it affects the nature of the relationship between two other variables, so that it is impossible to know if one variable causes another, or if the observed relationship is due to the third variable.

--

* The possible presence of confounding variables means we must be cautious when interpreting relationships.

---

## Examples of confounding?

* A 2012 [study](http://www.pnas.org/content/early/2012/08/22/1206820109) showed that heavy use of marijuana in adolescence can negatively affect IQ. *Is it possible that there are other variables, such as socioeconomic status, that are associated with both marijuana use and IQ?*

--

* Another 2012 [study](http://www.nejm.org/doi/full/10.1056/NEJMoa1112010) showed that coffee drinking was inversely related to mortality. *Should we all drink more coffee so we will live longer? Or is it possible that healthy people, who will live longer because they are healthy, are also more likely to drink coffee than unhealthy people?*

--

* Many nutrition studies. *Are people who are likely to stick to a diet different in important ways from those who won't?*

---

## How can confounding be avoided?

* Data can be collected through *experiments* or *observational studies*.

--

* In **observational studies**, data are collected without intervention. The data are measurements of existing characteristics of the individuals being measured.

--

* In **experiments**, an investigator imposes an intervention on the individuals being studied, randomly assigning some individuals to one treatment and randomly assigning other individuals to another treatment (sometimes this other treatment is a *control*).
--

* Randomized experiments are often used when we want to be able to say a treatment **causes** a change in a measurement.

--

* Other than the difference in treatment received, any differences between the individuals in the treatment and control groups are due only to random chance in their group assignment.

---

## How can confounding be avoided?

* In a randomized experiment, if there is a difference in our measurement of interest, we *may* be able to conclude it was caused by the treatment, and not by some other systematic difference that could confound our interpretation of the treatment's effect.

--

* Example experiment from the Week 5 lecture: Students were randomly assigned to be sleep-deprived or to have unrestricted sleep, and their learning of a visual discrimination task was compared between the two groups.

--

* It's not always practical or ethical to carry out an experiment. For example, it would be considered unethical to randomly assign people to smoke marijuana.
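---

.small[
A short simulation can illustrate why random assignment protects against confounding. This is a sketch under stated assumptions: a hypothetical pool of 700 patients whose stone sizes match the totals in the kidney stone data (343 large, 357 small), with treatment assigned by `sample()` instead of by a doctor. The names `size`, `treatment`, `rand_large`, and `obs_large` are illustrative, not from the course data.

```r
set.seed(130)  # arbitrary seed so the sketch is reproducible

# Hypothetical patient pool: 263 + 80 = 343 large stones, 87 + 270 = 357 small
size <- rep(c("large", "small"), times = c(343, 357))

# Random assignment: shuffle 350 A labels and 350 B labels, ignoring size
treatment <- sample(rep(c("A", "B"), each = 350))

# Proportion of large stones in each randomized group
rand_large <- tapply(size == "large", treatment, mean)

# Observational data, where doctors chose the treatment:
# 263 of 350 A patients and 80 of 350 B patients had large stones
obs_large <- c(A = 263 / 350, B = 80 / 350)

round(rbind(observational = obs_large, randomized = rand_large), 3)
```

In the observational data about 75% of Treatment A patients had large stones, compared with about 23% of Treatment B patients, so stone size is tangled up with treatment. Under random assignment both proportions should land near 343/700 = 0.49, differing only by chance, so size can no longer explain a difference in success rates.
]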